Applied AI/ML from MIT certificate program

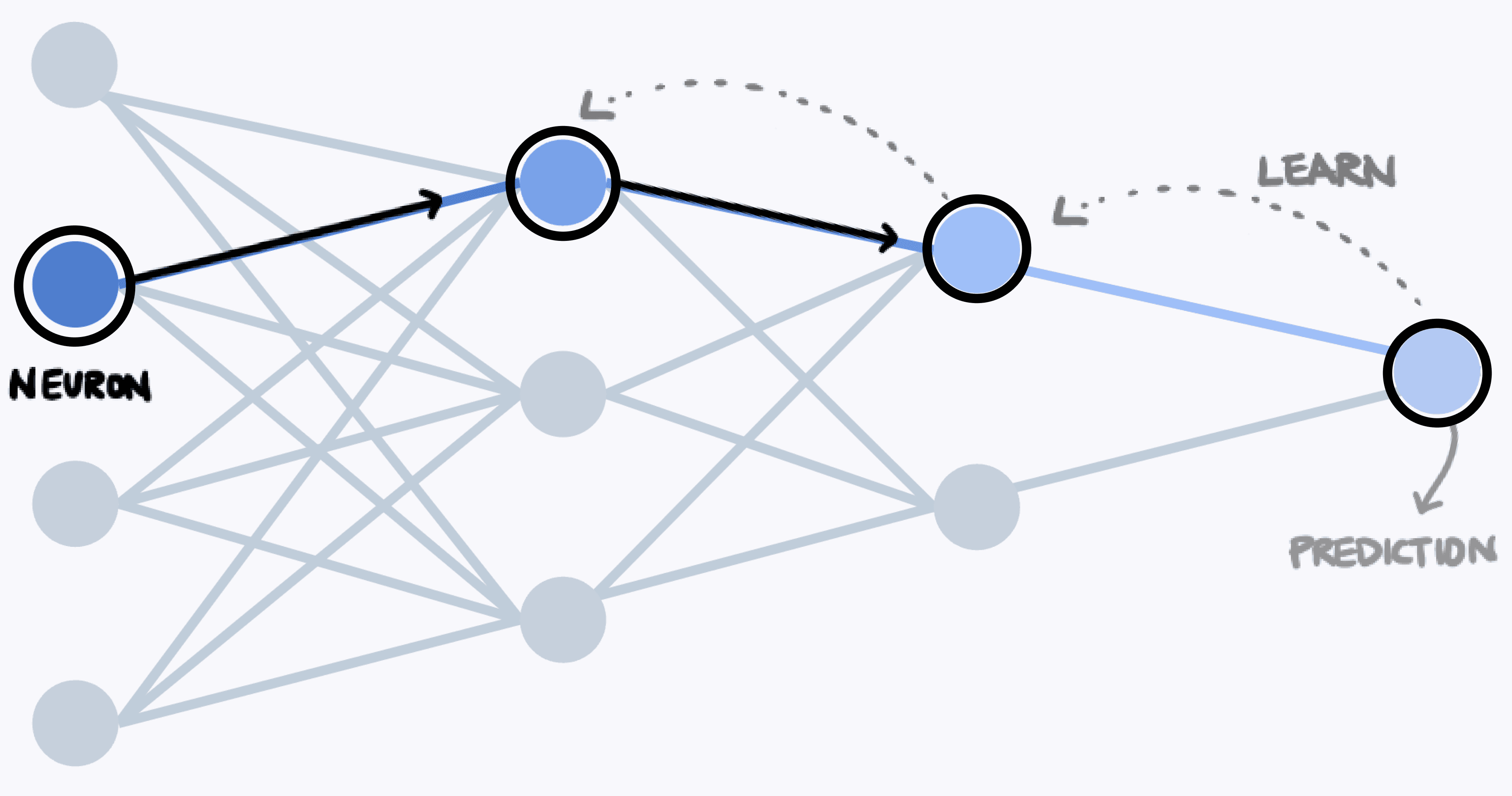

Integrating insights from the 2024 MIT No-Code AI and Machine Learning certificate program, these projects explore how AI agents and ML models can elevate clinician workflows, cybersecurity, and customer feedback analysis, with direct applications in product design.

AI agents enhancing clinician workflows

AI-driven workflows address administrative burdens that detract from patient care, automating scheduling and documentation to reduce clinician fatigue. Disclaimer: All concepts on this page are personal projects from my MIT training and have no affiliation with company work.

1

Adaptive AI scheduling for clinicians

Multiple AI assistants dynamically adjust clinician schedules, accommodating personal needs, while ensuring efficient clinical prioritization and workflow.

Highlights

Dynamic schedule adjustment

AI instantly adjusts schedules based on real-time data.

Task sequencing and prioritization

Efficiently sequences tasks, prioritizes urgencies, and time-boxes work.

Collaborative AI coordination

Multiple AI agents optimize workflow seamlessly.

Key AI/ML capabilities needed

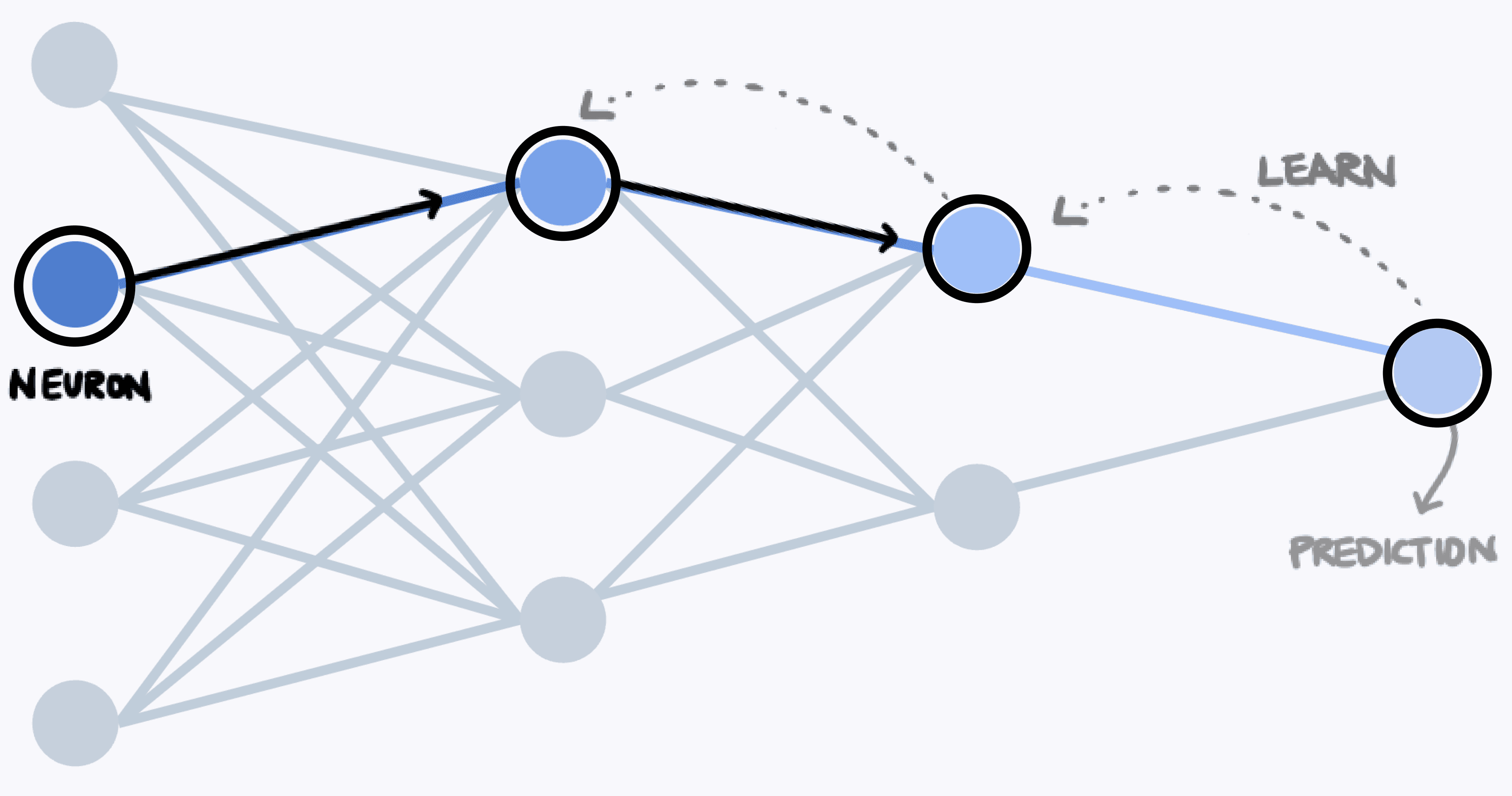

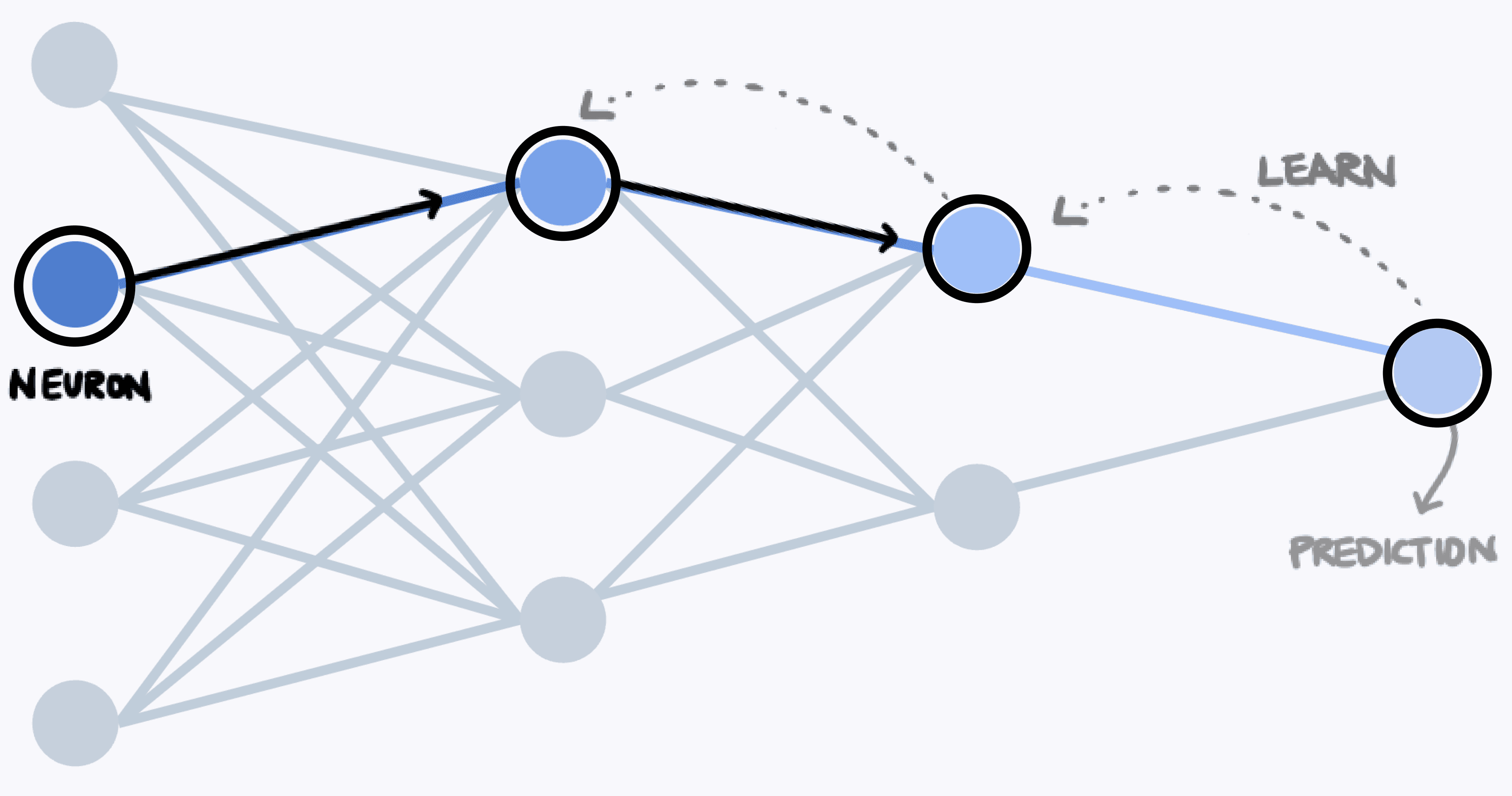

Reinforcement learning (RL)

Optimizes task sequencing using real-time data.

ML & optimization algorithms

Combines machine learning predictions with optimization techniques.

Natural language processing (NLP)

Interprets clinician inputs for schedule adjustments.
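As a rough illustration of the task sequencing and time-boxing described in this concept, the sketch below orders tasks by urgency and packs them into a fixed working window. It is a simple greedy heuristic, not reinforcement learning, and the `Task` fields, the urgency scale, and the 60-minute window are hypothetical choices made only for the example.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    urgency: int   # hypothetical scale: higher means more urgent
    minutes: int   # time box allotted to the task


def sequence_tasks(tasks, available_minutes):
    """Greedy sketch: most urgent first, defer tasks that no longer fit."""
    # heapq is a min-heap, so urgency is negated; the index breaks ties.
    heap = [(-t.urgency, i, t) for i, t in enumerate(tasks)]
    heapq.heapify(heap)

    schedule, deferred, clock = [], [], 0
    while heap:
        _, _, task = heapq.heappop(heap)
        if clock + task.minutes <= available_minutes:
            schedule.append((clock, task.name))  # start offset in minutes
            clock += task.minutes
        else:
            deferred.append(task.name)
    return schedule, deferred


tasks = [
    Task("Review lab results", urgency=3, minutes=20),
    Task("Urgent patient callback", urgency=5, minutes=15),
    Task("Chart documentation", urgency=2, minutes=45),
]
schedule, deferred = sequence_tasks(tasks, available_minutes=60)
print(schedule)
print(deferred)
```

A learned policy could replace the fixed urgency ordering, but the interface (tasks in, a time-boxed sequence plus deferred items out) would likely stay similar.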

2

AI-augmented communication and documentation

AI assistants help refine patient messages and clinical notes, streamlining communication and reducing clinician workload.

Highlights

Automated message drafting

AI generates message drafts matching clinician tone.

Efficient documentation

Automatically drafts standardized (e.g., SOAP) clinical notes to reduce administrative burden.

Integrated patient data

Summarizes relevant patient information within the interface.

Key AI/ML capabilities needed

Advanced NLP & generation

Uses transformers to create coherent, contextually aware messages and notes.

Context-aware summarization

Summarizes relevant patient history and recent interactions for efficient documentation.

Personalized language models

Fine-tunes AI outputs to match individual clinician styles, enhancing authenticity.
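To make the standardized note format concrete, here is a minimal sketch of a SOAP (Subjective, Objective, Assessment, Plan) draft container. It only shows the structure a drafting assistant might fill in; the class name, the placeholder text, and the sample values are illustrative assumptions, and no language model is involved.

```python
from dataclasses import dataclass


@dataclass
class SoapNote:
    subjective: str   # patient-reported symptoms and history
    objective: str    # measurements and exam findings
    assessment: str   # clinician's interpretation
    plan: str         # next steps

    def render(self) -> str:
        sections = [
            ("Subjective", self.subjective),
            ("Objective", self.objective),
            ("Assessment", self.assessment),
            ("Plan", self.plan),
        ]
        # Mark empty sections so the clinician sees what still needs input.
        return "\n".join(
            f"{title}: {text.strip() or '[needs clinician input]'}"
            for title, text in sections
        )


# Placeholder values for illustration only.
draft = SoapNote(
    subjective="Reports mild headache for two days.",
    objective="BP 118/76, temperature 36.8 C.",
    assessment="",
    plan="Follow up in one week.",
)
print(draft.render())
```

Leaving the assessment blank by default is one way to keep the clinician, rather than the assistant, responsible for the clinical judgment in the note.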

3

Intelligent data verification and decision support

AI assists clinicians in verifying patient data, enhancing decision-making accuracy while incorporating restorative breaks for well-being.

Highlights

Real-time data retrieval

AI surfaces relevant patient data instantly.

Interactive verification

Clinicians confirm and update data through AI collaboration.

Decision insights

Provides insights for informed clinical decisions.

Key AI/ML capabilities needed

Retrieval-augmented generation (RAG)

Combines data retrieval with transformers to improve decision accuracy.

Explainable AI (XAI)

Offers transparent reasoning behind data suggestions to build clinician trust.

User state modeling

Monitors clinician stress and workload levels to dynamically adjust schedules.
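The retrieval half of retrieval-augmented generation can be sketched without any model at all. The example below ranks record snippets by keyword overlap with the question and assembles a numbered context for a downstream generator. Keyword overlap is a stand-in here for the embedding search a real system would more likely use, the generation step is omitted, and the records are made-up placeholders.

```python
import re


def tokenize(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, records, top_k=2):
    """Rank records by how many query words they share (toy scoring)."""
    q = tokenize(query)
    scored = [(len(q & tokenize(r)), r) for r in records]
    scored = [s for s in scored if s[0] > 0]
    # The sort is stable, so ties keep their original order.
    scored.sort(key=lambda s: s[0], reverse=True)
    return [r for _, r in scored[:top_k]]


def build_prompt(query, records):
    context = retrieve(query, records)
    lines = [f"[{i + 1}] {r}" for i, r in enumerate(context)]
    # Numbered sources let a generated answer point back to its evidence.
    return "Context:\n" + "\n".join(lines) + f"\n\nQuestion: {query}"


# Placeholder snippets for illustration only.
records = [
    "Allergy list updated: penicillin allergy confirmed.",
    "Last visit: patient blood pressure within normal range.",
    "Diet: vegetarian, no restrictions noted.",
]
prompt = build_prompt("Does the patient have a penicillin allergy?", records)
print(prompt)
```

Numbering the retrieved snippets also supports the explainability goal above, since each suggestion can cite the record it came from.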

4

AI-driven patient care planning

AI creates temporary patient clusters, allowing clinicians to efficiently deliver customized advice to many patients with minimal effort.

Highlights

Ephemeral patient clustering

AI forms temporary groups based on dietary and medical profiles.

Bulk nutrition plan generation

Creates tailored nutrition advice for entire patient groups with a simple action.

Dynamic task creation

Proactively creates and sequences follow-up tasks based on clinician and patient needs.

Key AI/ML capabilities needed

Dynamic clustering

Utilizes algorithms to create and dissolve patient groups based on current needs.

Batch recommendations

Generates personalized nutrition plans for entire clusters efficiently.

Chained task automation

Automatically creates follow-up tasks based on ongoing patient interactions.
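One simple way to picture ephemeral grouping is to bucket patients by shared profile attributes and recompute the buckets whenever they are needed. The sketch below does exact-match grouping, which is much simpler than a learned clustering algorithm; the field names (`diet`, `condition`) and the sample patients are hypothetical.

```python
from collections import defaultdict


def form_groups(patients, keys=("diet", "condition")):
    """Group patients that share the same values for the chosen profile keys."""
    groups = defaultdict(list)
    for p in patients:
        groups[tuple(p[k] for k in keys)].append(p["id"])
    return dict(groups)


# Placeholder profiles for illustration only.
patients = [
    {"id": "P1", "diet": "vegetarian", "condition": "type 2 diabetes"},
    {"id": "P2", "diet": "vegetarian", "condition": "type 2 diabetes"},
    {"id": "P3", "diet": "gluten-free", "condition": "hypertension"},
]

# Groups are recomputed on demand and never stored, which is what makes
# them "ephemeral" in this sketch.
groups = form_groups(patients)
for profile, members in groups.items():
    print(profile, members)
```

Changing `keys` regroups the same patients along a different dimension, so a group exists only for as long as the current need does.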

Applied AI and machine learning case studies

Practical case studies from MIT's AI program showcase solutions in decision systems, unstructured data analysis, and prompt engineering, tackling business challenges with predictive analytics and automation.

1

Predicting hotel booking cancellations with machine learning

EDA - Bivariate analysis

1

Lead time: 50% of booking cancellations occur within 3 months.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Model training

2

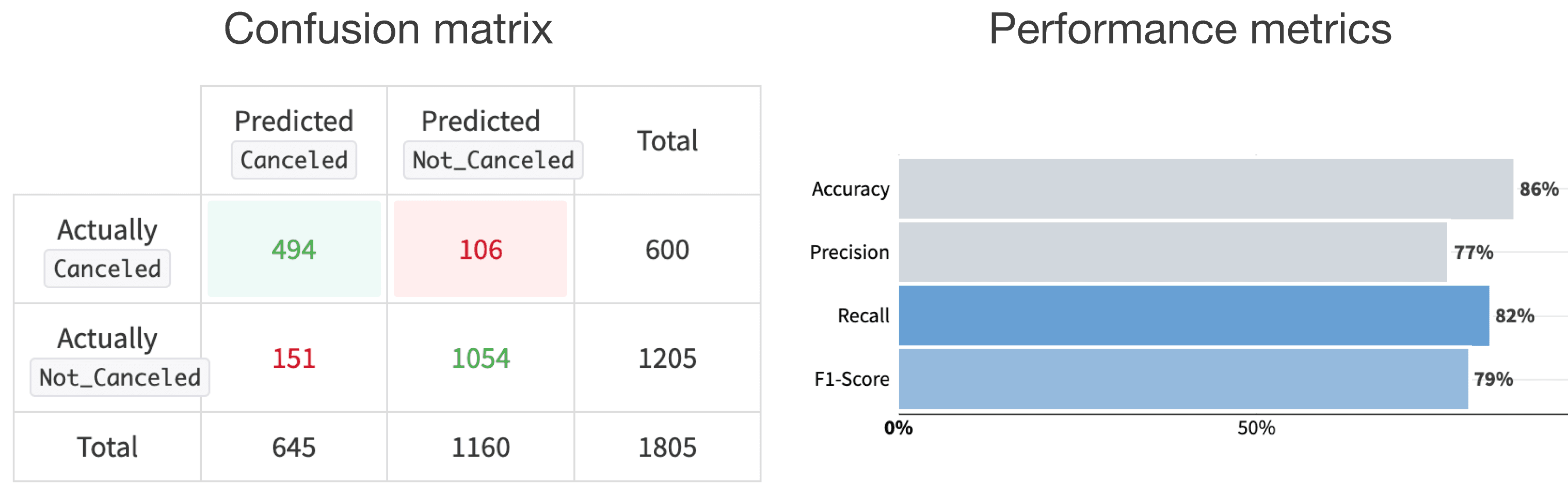

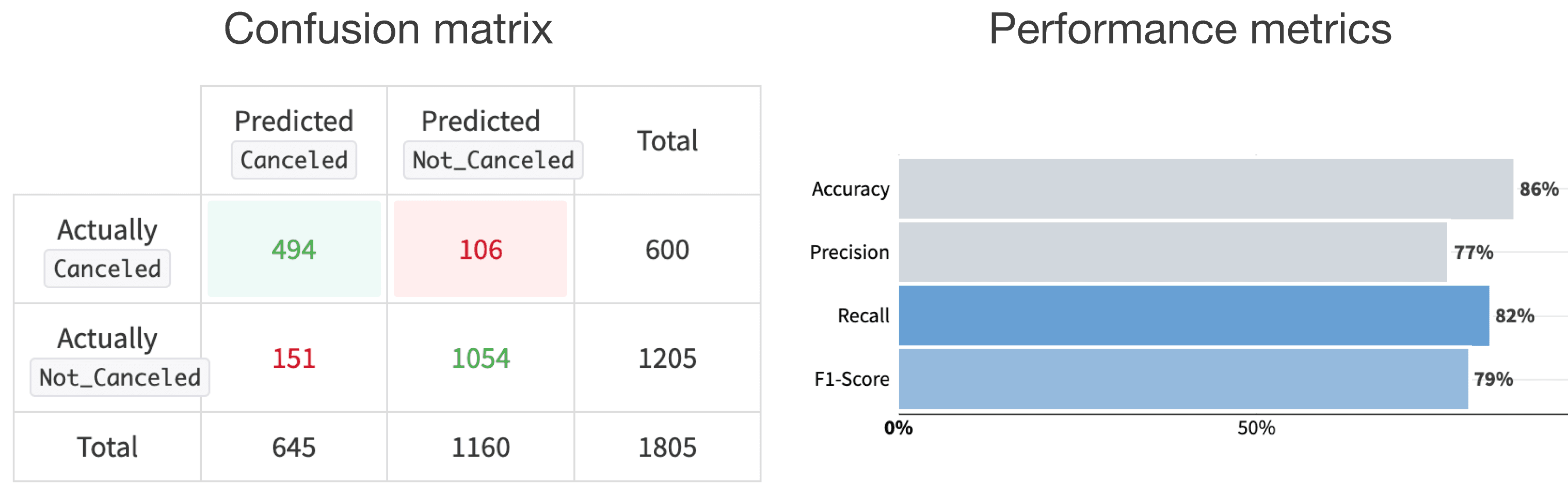

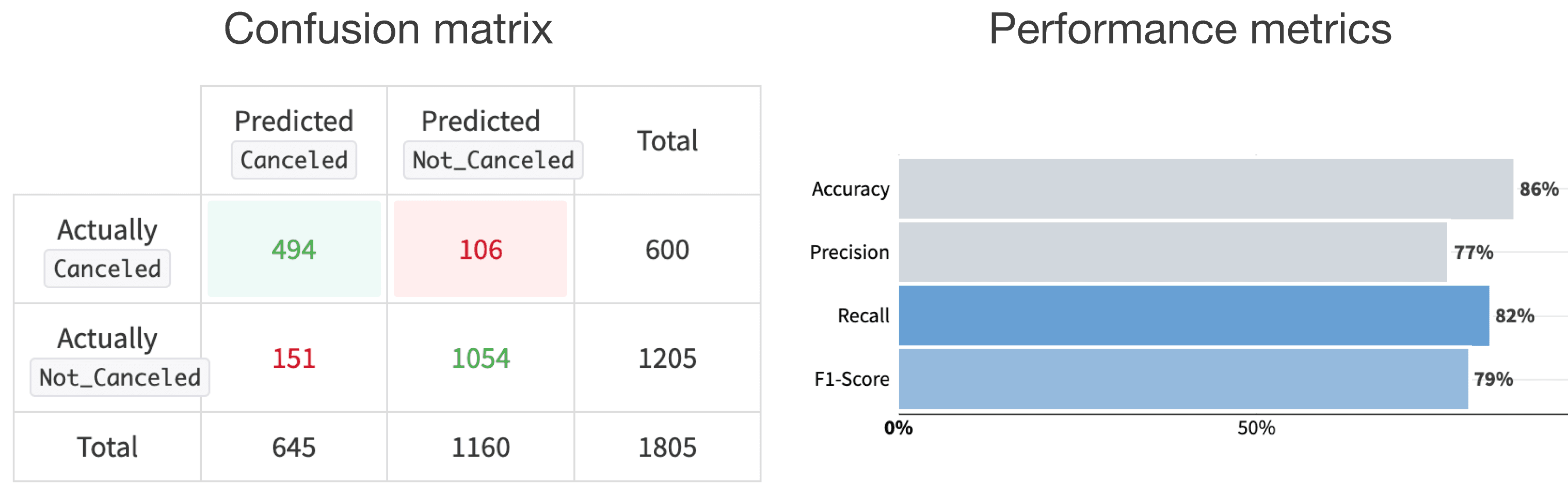

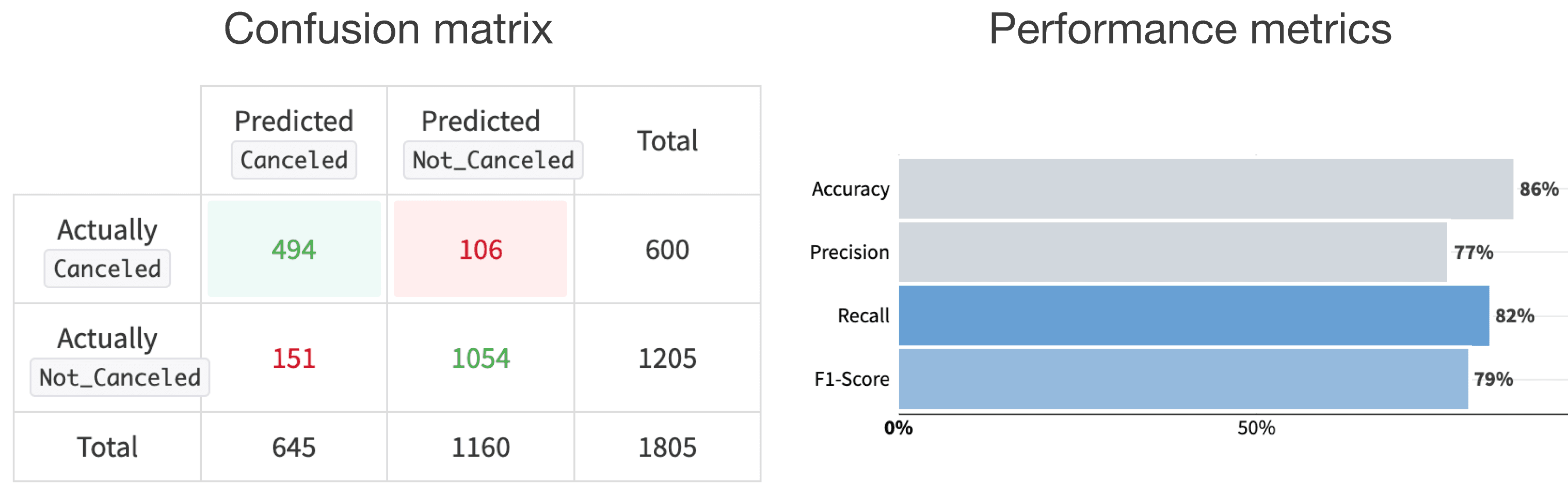

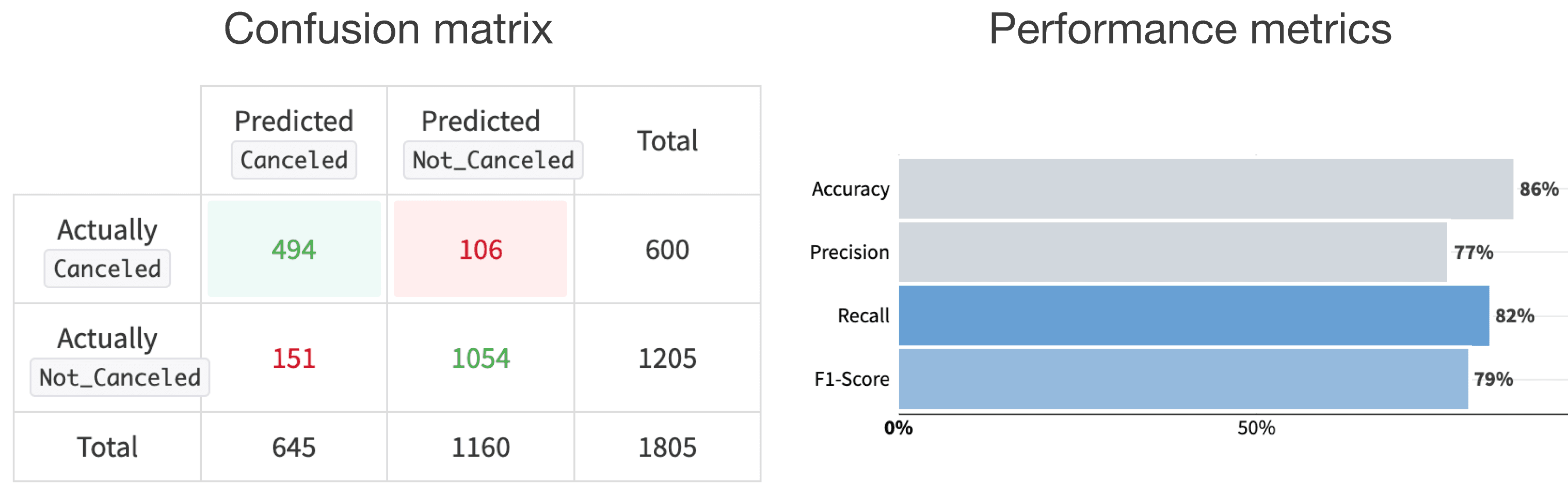

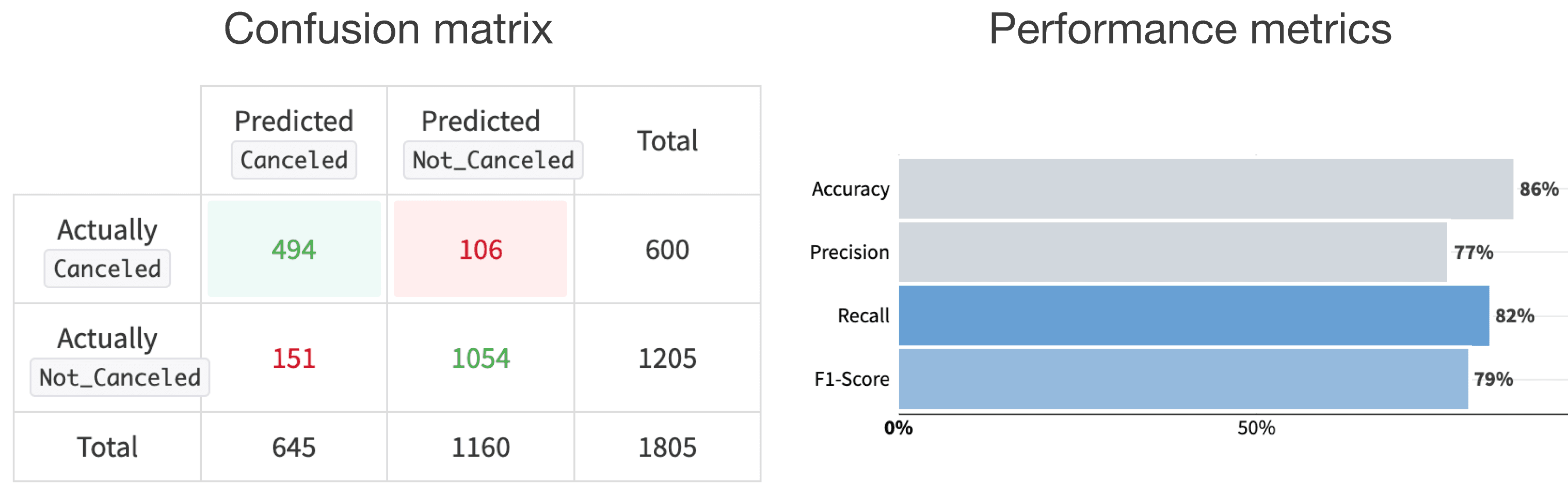

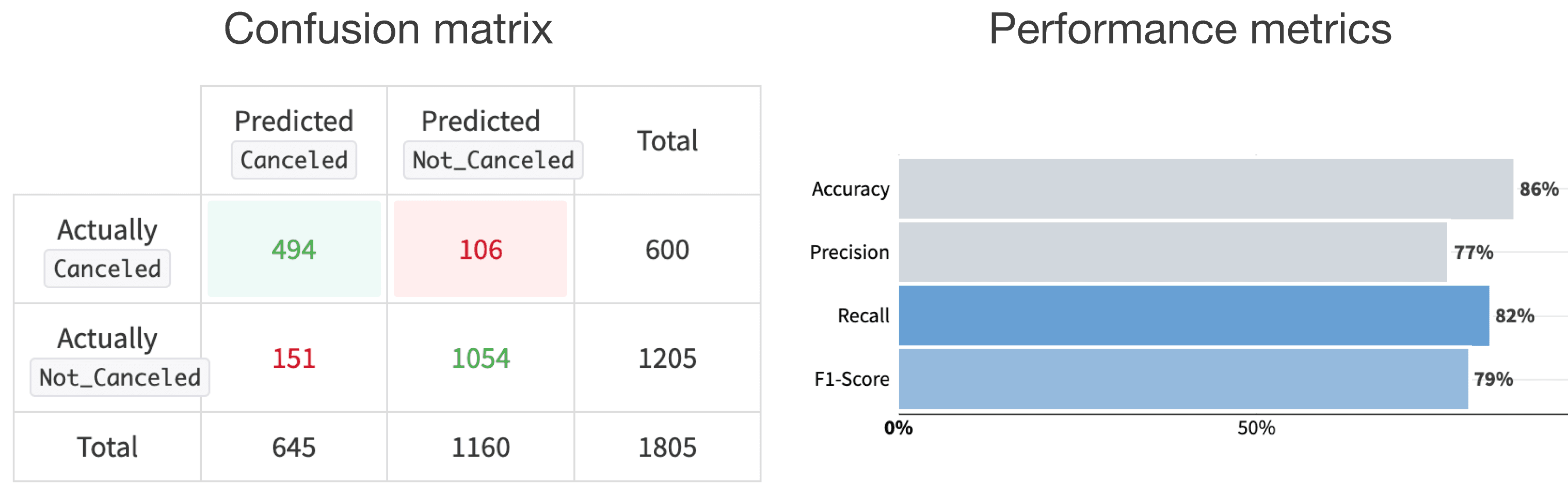

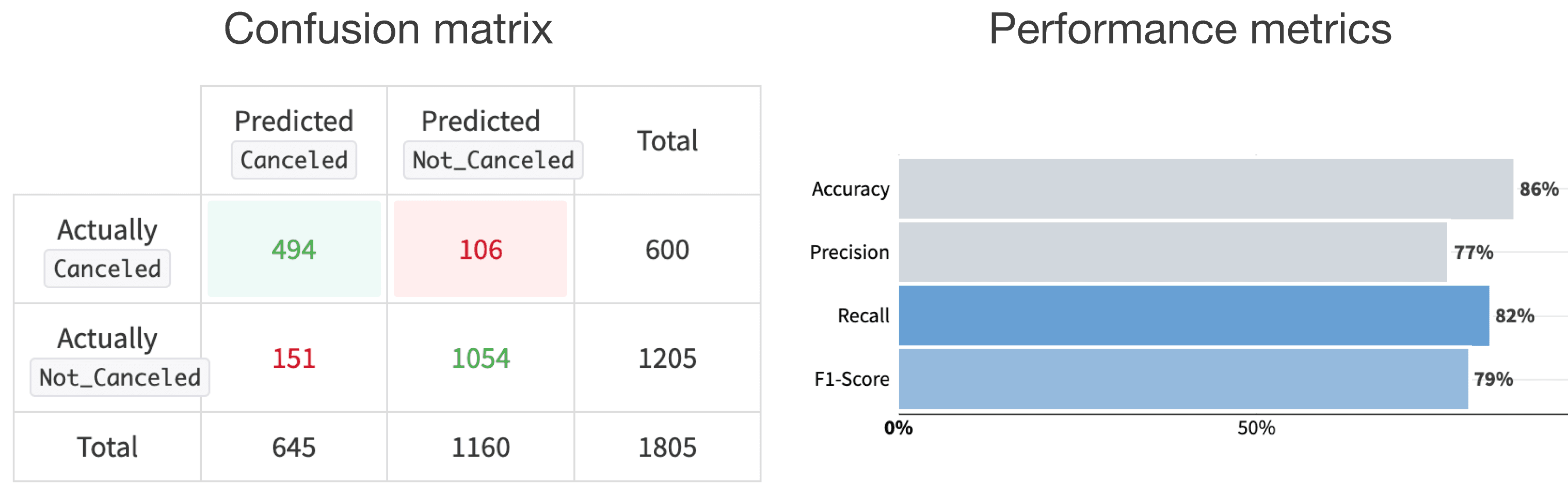

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations. Correctly identified 494 cancellations, missed 106.

Performance metrics: Precision decreased by 5%, while recall increased by 4% from the baseline Random Forest model.

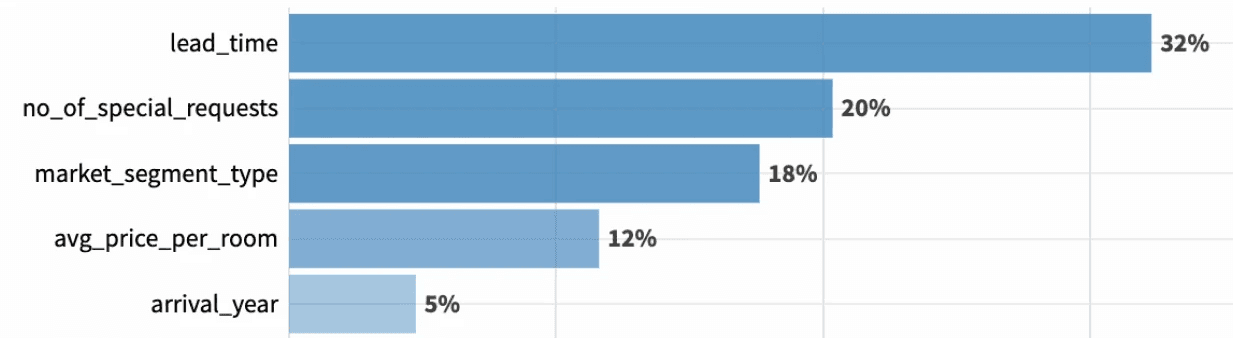

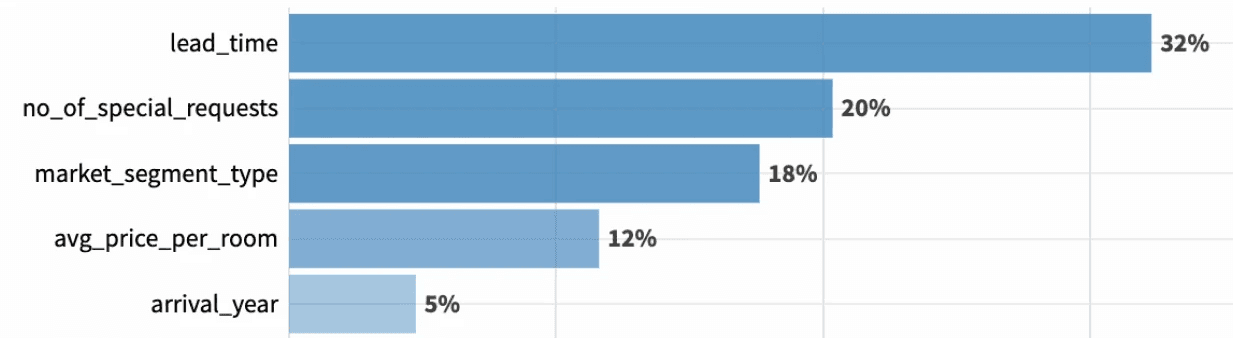

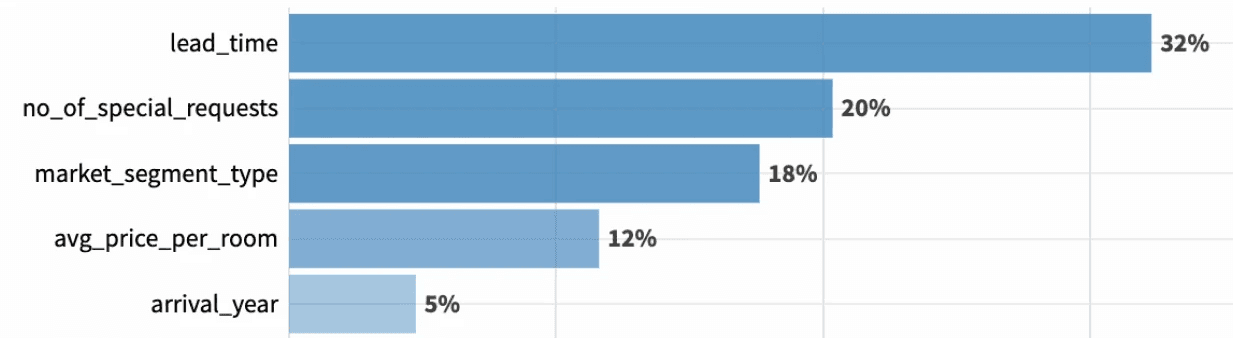

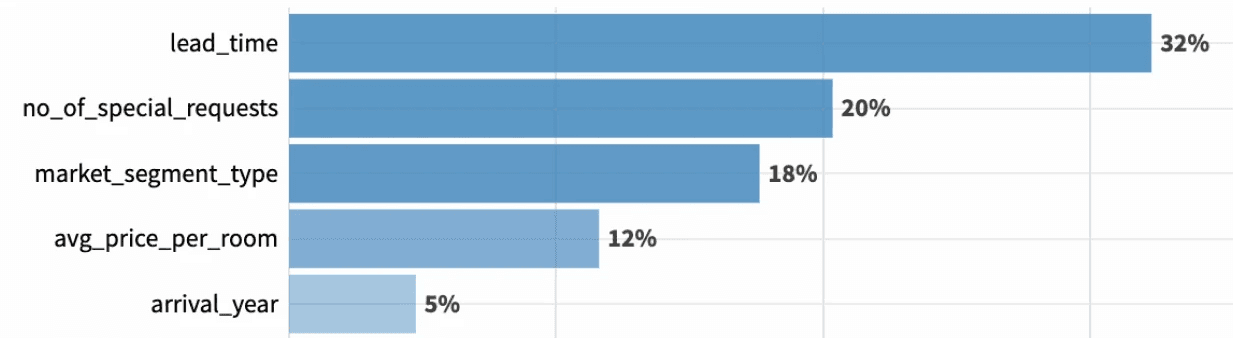

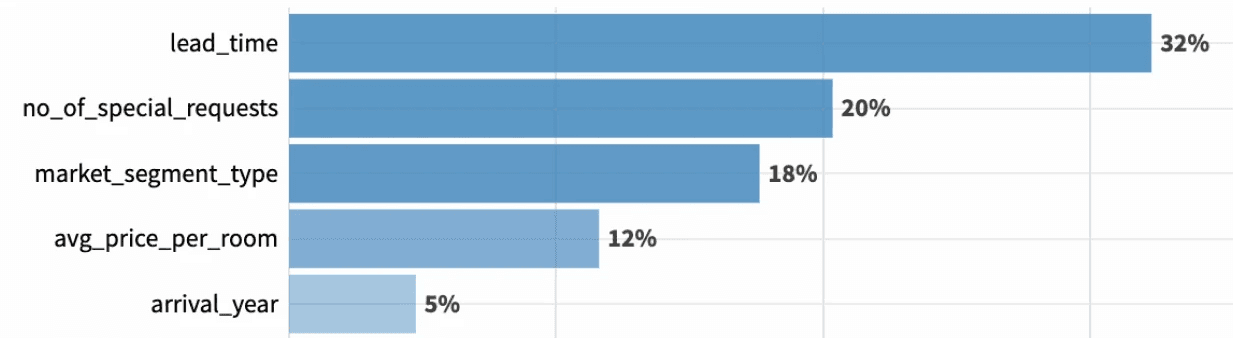

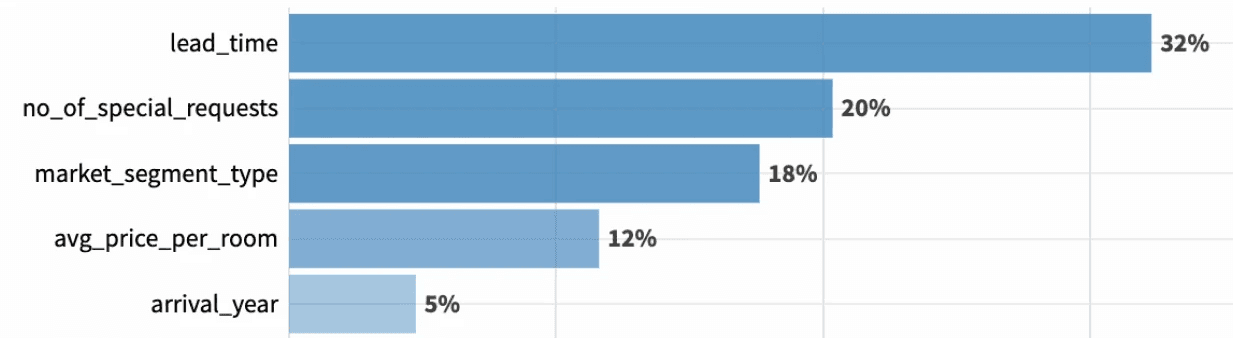

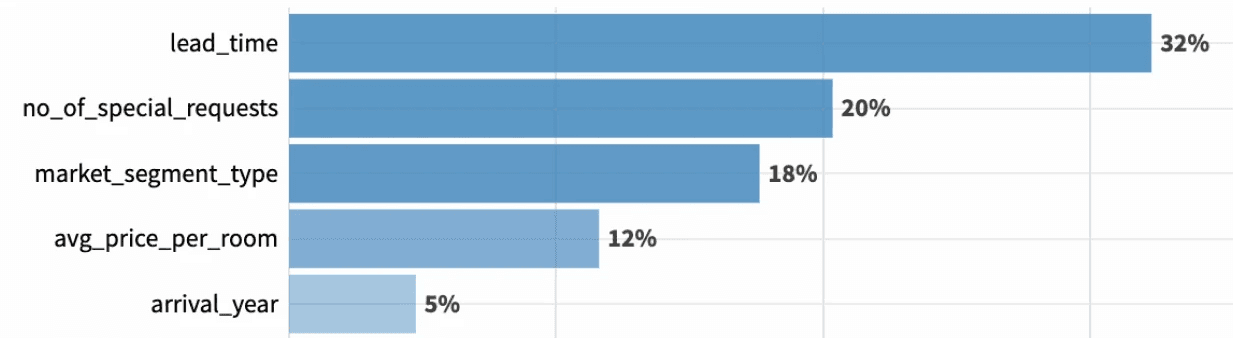

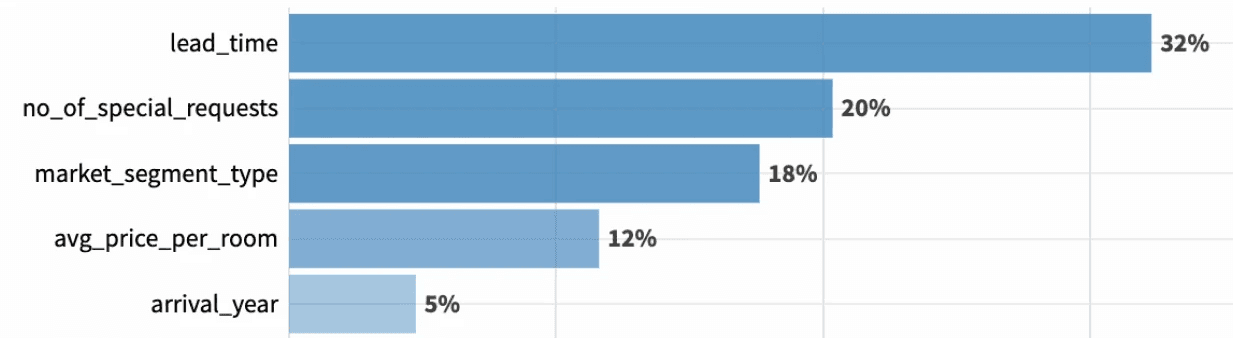

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Recommendations

5

Targeted policies: Tier cancellations for short lead times, online bookings, $54-$162 rooms; incentivize special requests.

Enhance online experience: Improve booking with better data, research, and testing.

Boost retention: Prioritize loyalty for revenue over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting that guests who make no requests are less invested in their booking.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

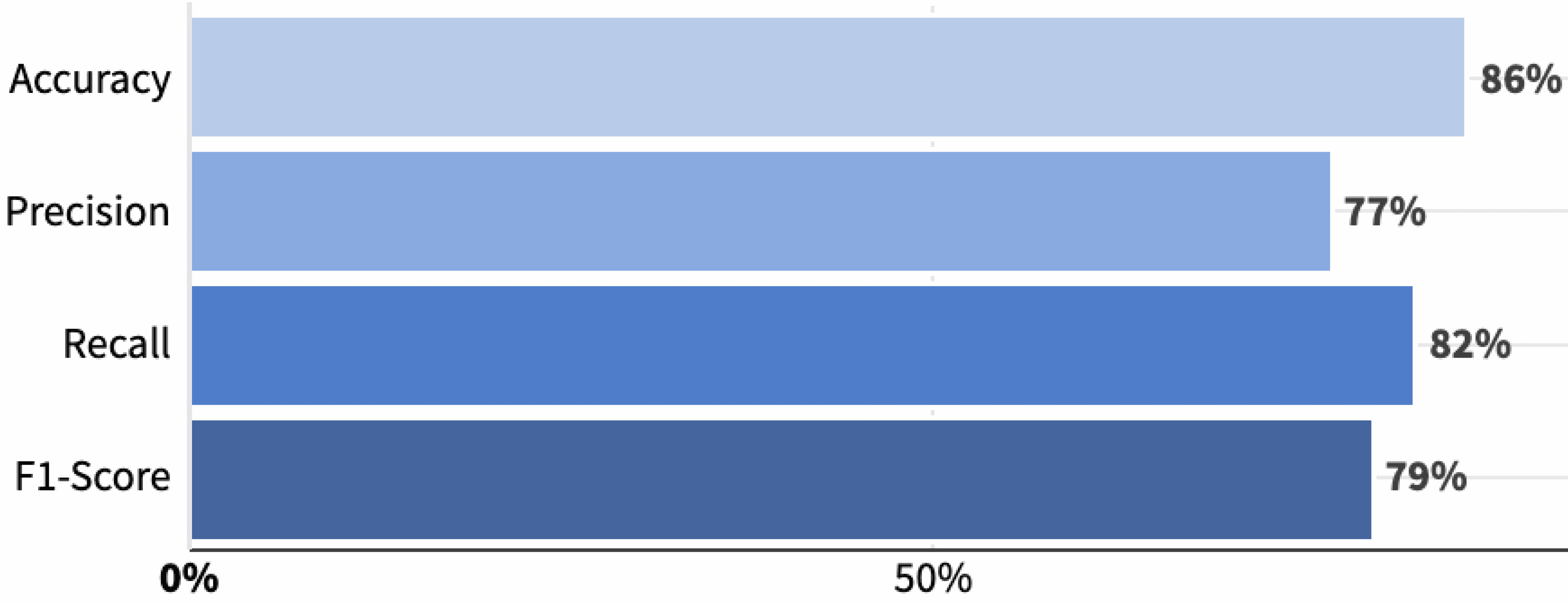

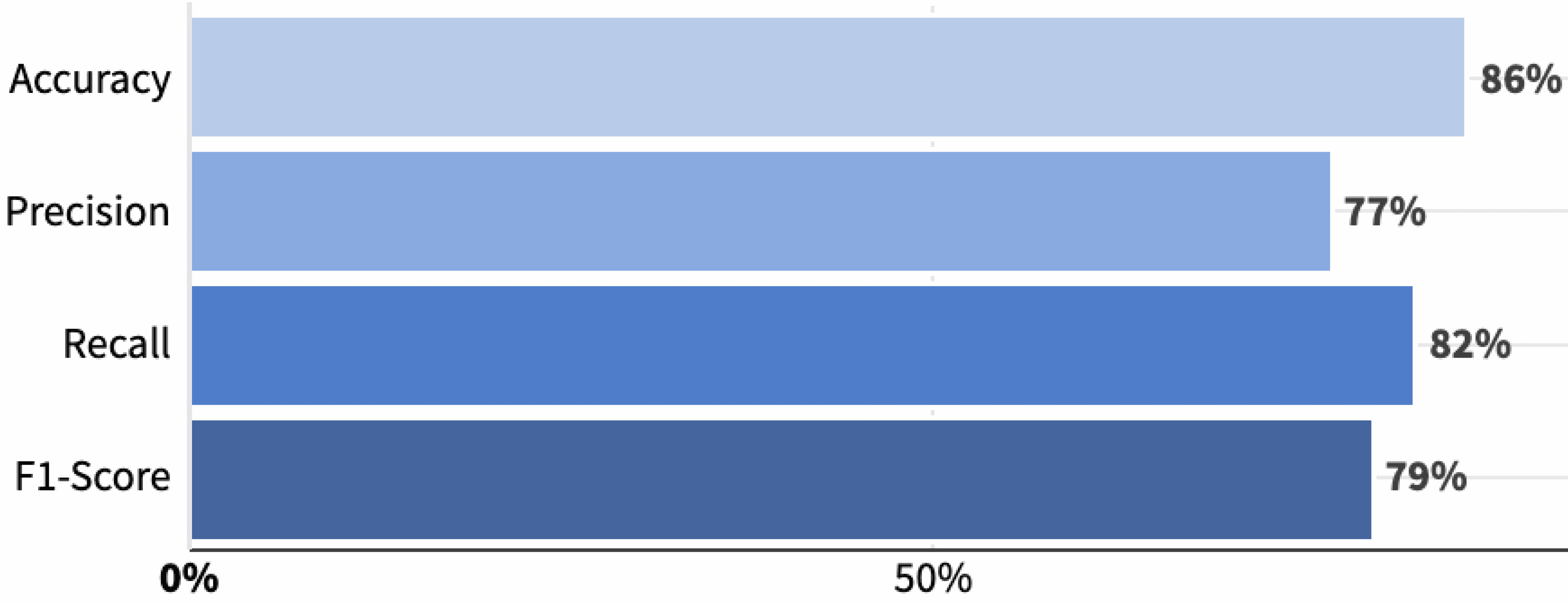

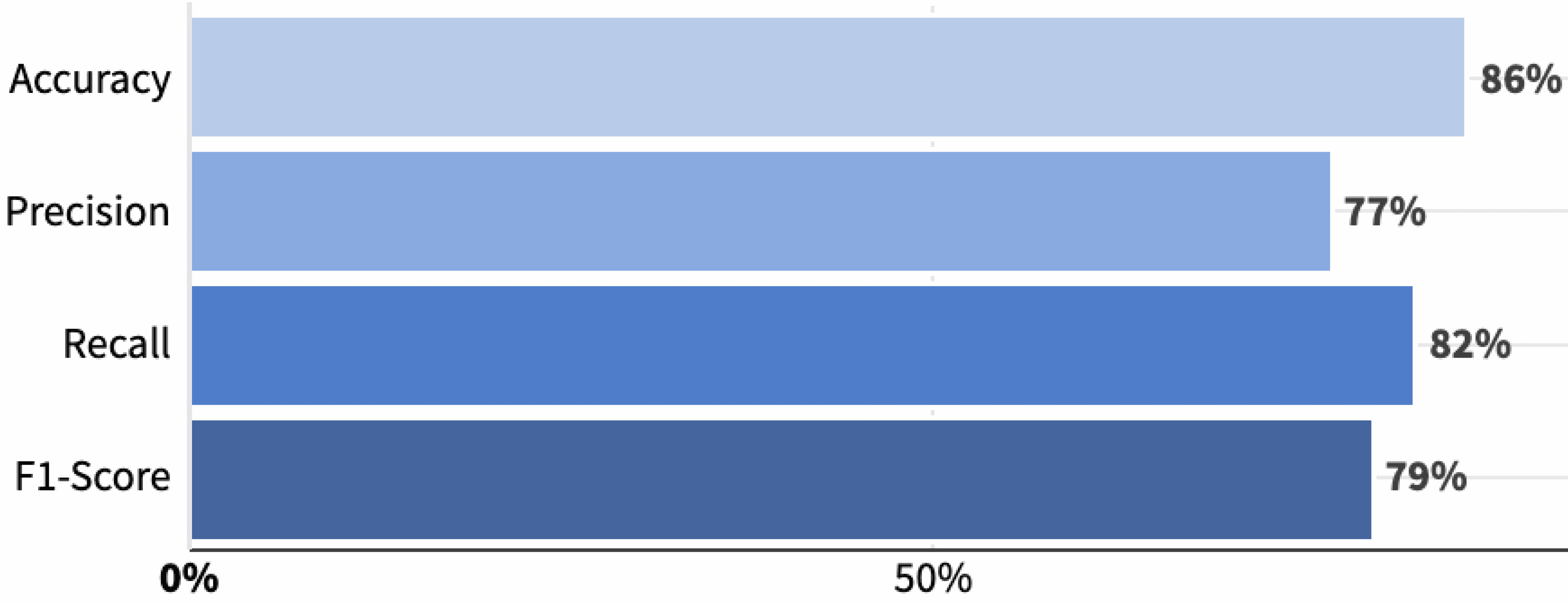

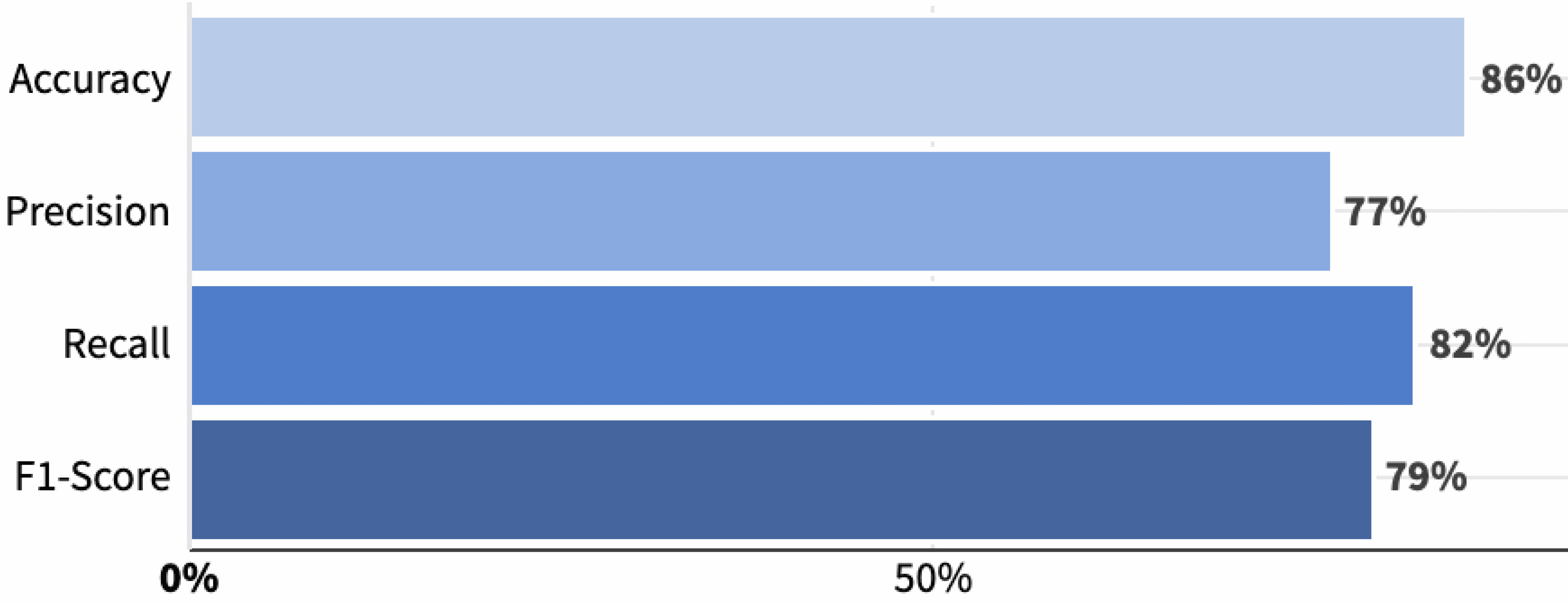

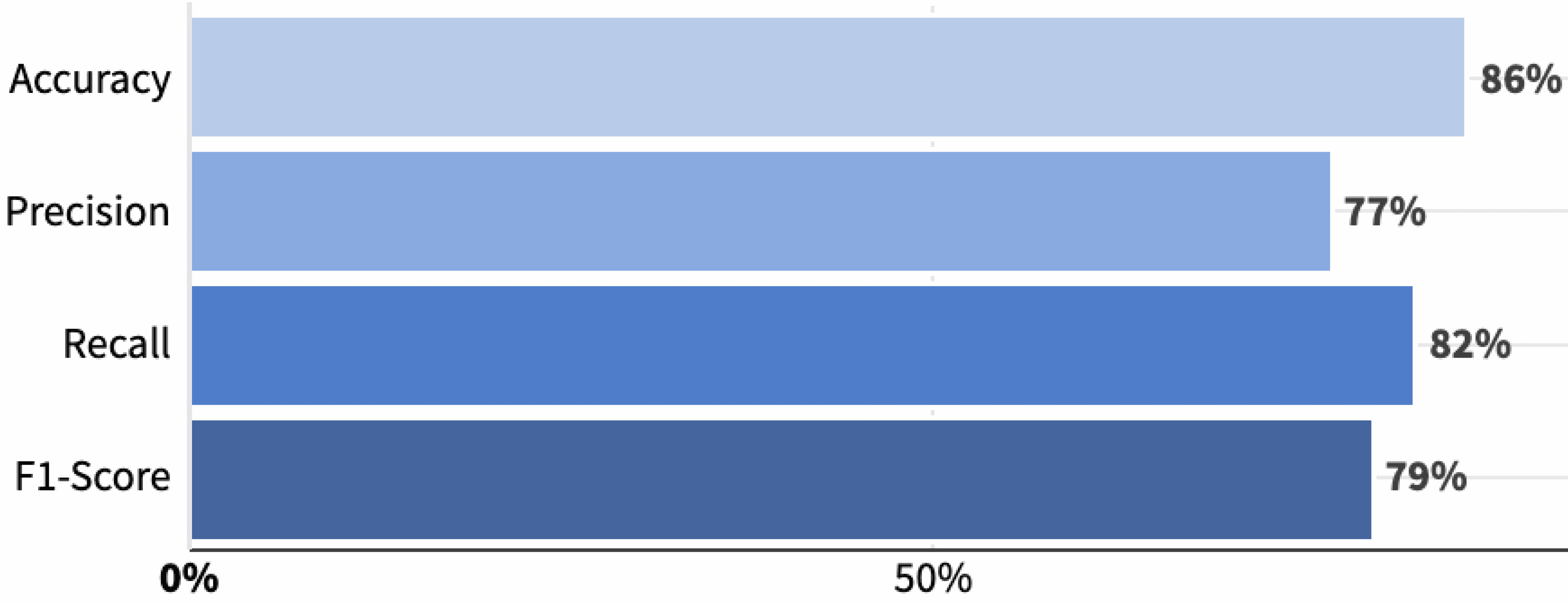

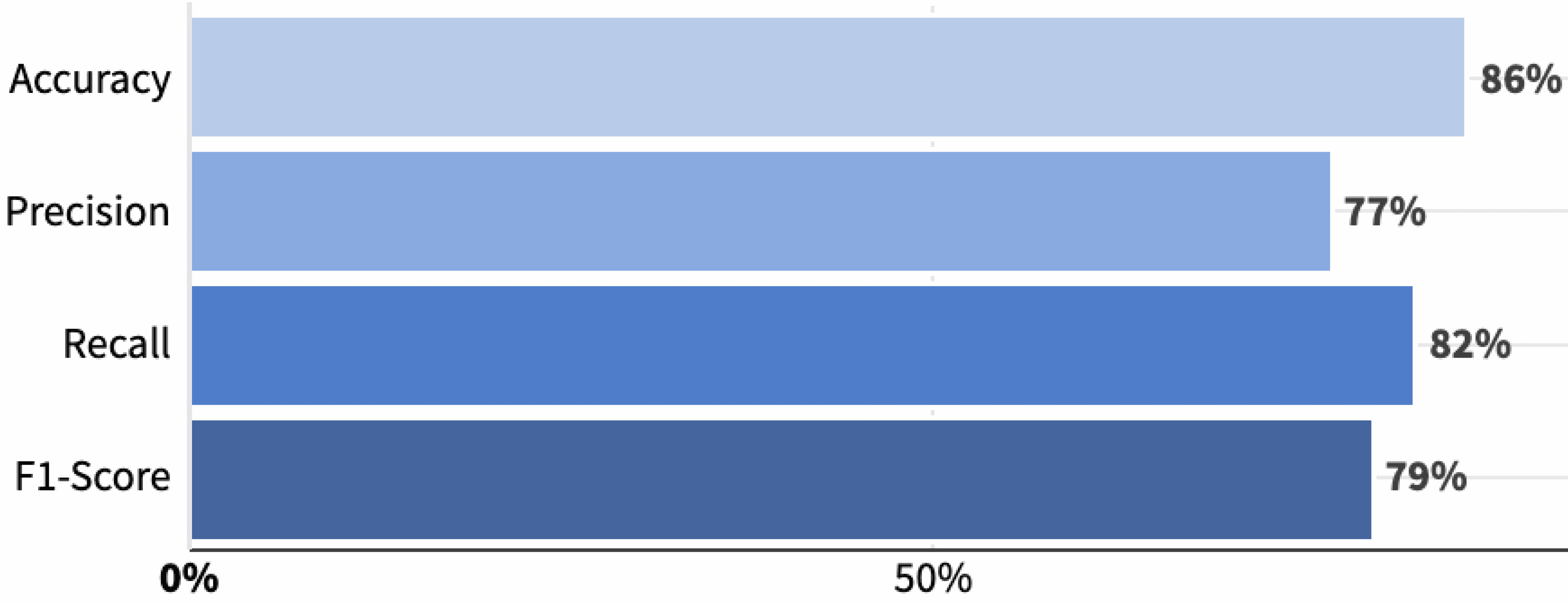

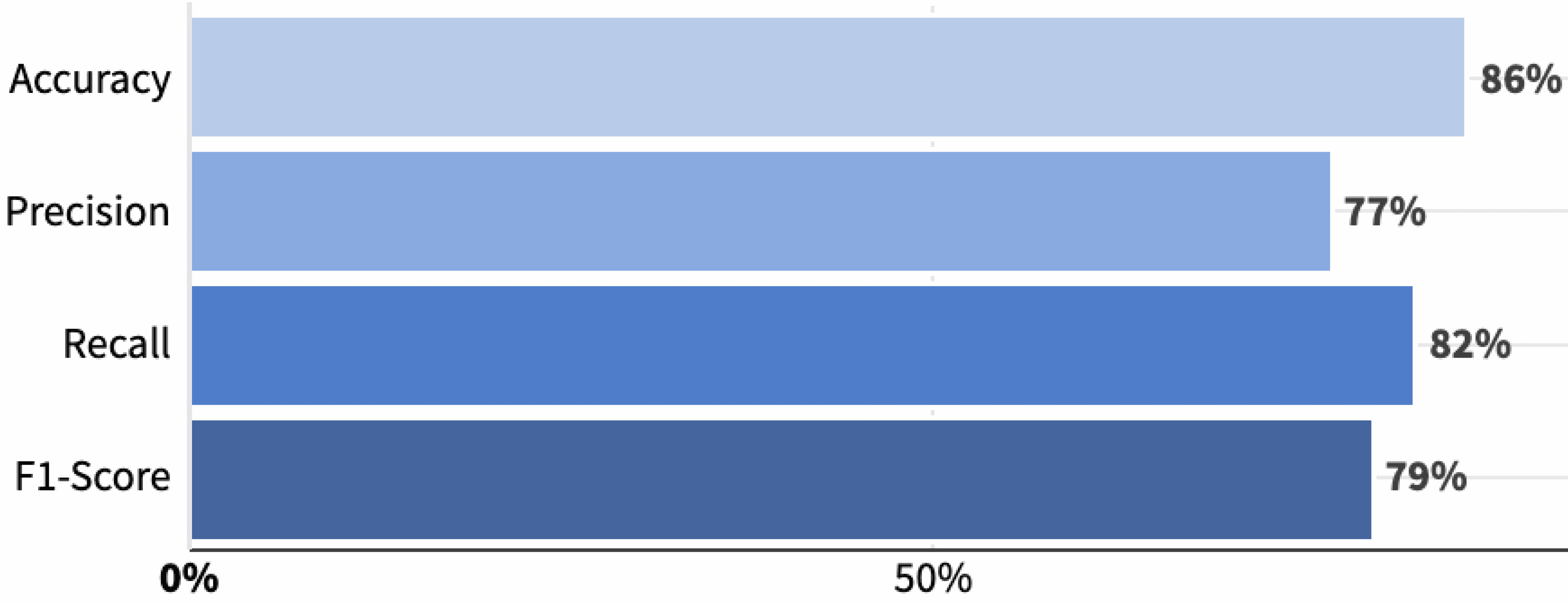

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and limit opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

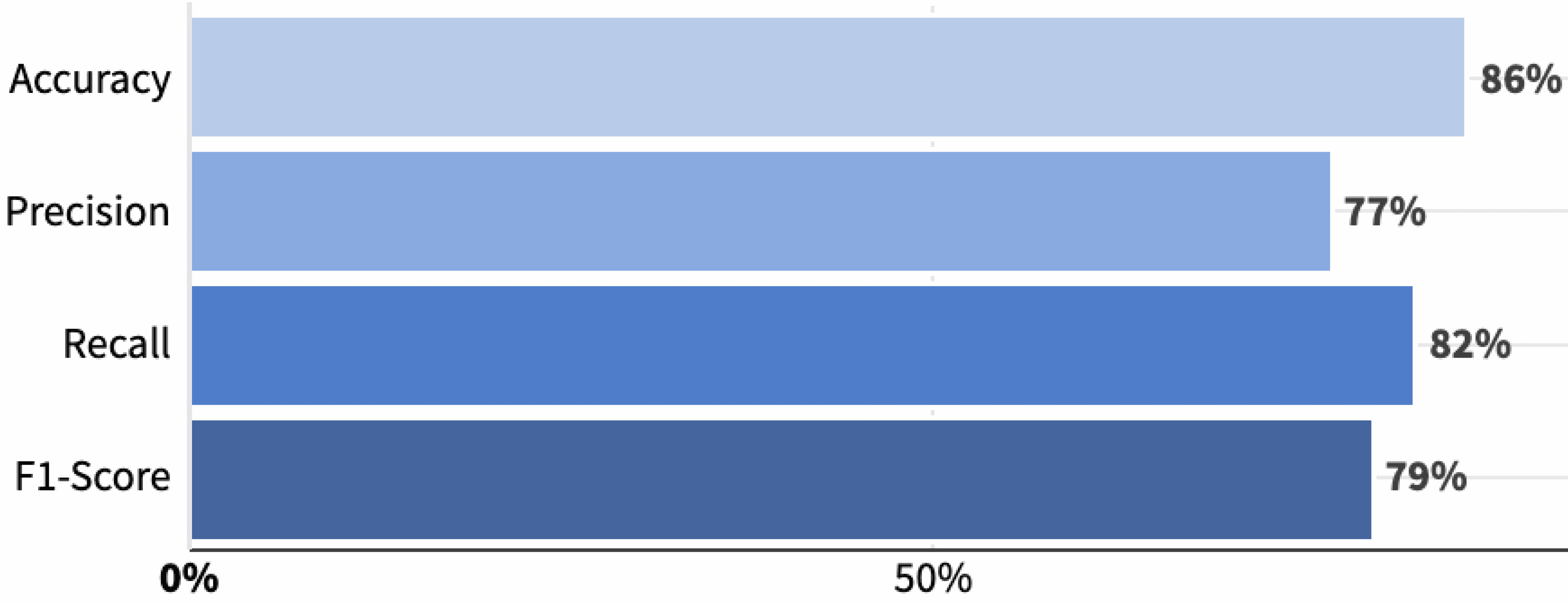

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project
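The reported metrics can be cross-checked from the confusion matrix counts. The sketch below recomputes recall from the 494 identified and 106 missed cancellations, then combines it with the reported precision to get F1. The false-positive count is not given on this page, so the last line only derives the value that the reported precision would imply.

```python
def recall(tp, fn):
    return tp / (tp + fn)


def f1(precision, recall_value):
    # Harmonic mean of precision and recall.
    return 2 * precision * recall_value / (precision + recall_value)


tp, fn = 494, 106          # from the confusion matrix above
reported_precision = 0.77  # reported value; false positives are not listed

r = recall(tp, fn)
print(f"recall = {r:.3f}")
print(f"F1     = {f1(reported_precision, r):.3f}")

# Precision = TP / (TP + FP), so the reported precision implies roughly
# this many false positives (a derived estimate, not a project figure).
implied_fp = tp / reported_precision - tp
print(f"implied false positives ~ {implied_fp:.0f}")
```

Recall comes out at roughly 0.82, matching the reported figure, and F1 lands within a point of the reported 79% because the precision input is already rounded.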

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

Number of special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.
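The tiered cancellation policy can be pictured as a small rule that counts how many of the reported risk factors a booking matches. This is a rule-of-thumb sketch, not the Random Forest model: the 90-day cutoff for a "short" lead time, the tier names, and the score thresholds are all assumptions, while the $54-$162 price band comes from the analysis above.

```python
def cancellation_tier(lead_time_days, booked_online, avg_price,
                      special_requests, short_lead_days=90):
    """Map a booking to a policy tier by counting matched risk factors."""
    risk_factors = [
        lead_time_days <= short_lead_days,  # short lead time (cutoff assumed)
        booked_online,                      # online market segment
        54 <= avg_price <= 162,             # price band from the analysis
        special_requests == 0,              # no special requests
    ]
    score = sum(risk_factors)
    if score >= 3:
        return "strict"      # hypothetical tier names
    if score == 2:
        return "standard"
    return "flexible"


print(cancellation_tier(30, True, 120.0, 0))
print(cancellation_tier(200, False, 250.0, 2))
```

In practice the model's predicted cancellation probability could replace the hand-counted score, with the same tiers applied to probability bands.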

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.
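
The tiered-policy recommendation could be prototyped as a simple rule set. The sketch below is hypothetical throughout: the `Booking` fields, the 90-day lead-time cutoff, and the tier names are illustrative assumptions, and only the $54-$162 price band and the three risk factors come from the findings above.

```python
from dataclasses import dataclass


@dataclass
class Booking:
    lead_time_days: int
    market_segment: str        # e.g. "Online" or "Offline"
    avg_price_per_room: float
    special_requests: int


SHORT_LEAD_TIME_DAYS = 90      # hypothetical cutoff, not from the project
PRICE_BAND = (54.0, 162.0)     # price range where cancellations cluster


def risk_factors(booking):
    """List which of the three targeted risk factors apply to a booking."""
    factors = []
    if booking.lead_time_days <= SHORT_LEAD_TIME_DAYS:
        factors.append("short lead time")
    if booking.market_segment.lower() == "online":
        factors.append("online booking")
    if PRICE_BAND[0] <= booking.avg_price_per_room <= PRICE_BAND[1]:
        factors.append("price in high-cancellation band")
    return factors


def policy_tier(booking):
    """Map the number of risk factors to a hypothetical policy tier."""
    count = len(risk_factors(booking))
    if count == 3:
        return "strict"
    if count == 2:
        return "standard"
    return "flexible"


def offer_request_incentive(booking):
    """Flag bookings with no special requests for an incentive offer."""
    return booking.special_requests == 0


example = Booking(lead_time_days=30, market_segment="Online",
                  avg_price_per_room=100.0, special_requests=0)
print(policy_tier(example), offer_request_incentive(example))
```

In practice the cutoffs and tiers would need to be tuned against the model's predictions and tested with guests before rollout.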

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Accuracy: 86%

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

EDA - Bivariate analysis

1

Analyze key trends and correlations in booking data.

Lead time: 50% of booking cancellations occur within 3 months, increasing closer to the arrival date.

Special requests: 71% of cancellations had no special requests, suggesting lower investment among guests who book these rooms.

Screenshot from my Dataiku project

Model training

2

Screenshot from my Dataiku project

Model evaluation

3

Model selection: Pruned Random Forest model selected for optimal recall, minimizing missed booking cancellations to protect revenue and opportunity costs.

Confusion matrix: Correctly identified 494 cancellations, missed 106.

Performance metrics

Precision: 77% (-5% from baseline)

Recall: 82% (+4% from baseline)

F1-score: 79%

Screenshot from my Dataiku project

Top predictors

4

Lead time (32%): Short booking windows increase cancellations.

# Special requests (20%): Fewer requests mean higher cancellations.

Market segment (18%): Online bookings cancel more often.

Avg price per room (12%): Cancellations cluster in the $54-$162 range.

Screenshot from my Dataiku project

Recommendations

5

Targeted cancellation policies

Apply tiered policies for short lead times, online bookings, and $54-$162 rooms; offer incentives for special requests.

Optimize online experience

Strengthen data collection, research, and testing to enhance booking and cancellation processes.

Focus on retention

Drive revenue by prioritizing repeat guest loyalty over new customer acquisition.

Used ML models to predict booking cancellations at INN Hotels and identify the key predictive factors, addressing the financial and operational challenges cancellations create in order to minimize revenue loss and optimize operations.

Goals

Minimize revenue loss

Reduce financial impact from high cancellation rates.

Optimize operations

Improve resource allocation and staffing efficiency.

Problems

Revenue loss from booking cancellations

High cancellation rates at INN Hotels lead to significant financial and operational challenges.

Operational disruptions

Unpredictable cancellations disrupt resource allocation and staffing, affecting service quality.

Highlights

Automated message drafting

AI instantly adjusts schedules based on real-time data.

Collaborative AI coordination

Multiple AI agents optimize workflow seamlessly.

Task sequencing and prioritization

Efficiently sequences tasks, prioritizes urgencies, and time-boxes work.

Key AI/ML capabilities needed

Reinforcement learning (RL)

Optimizes task sequencing using real-time data.

ML & optimization algorithms

Combines machine learning predictions with optimization techniques.

Natural language processing (NLP)

Interprets clinician inputs for schedule adjustments.

2

Detecting SMS spam with natural language processing

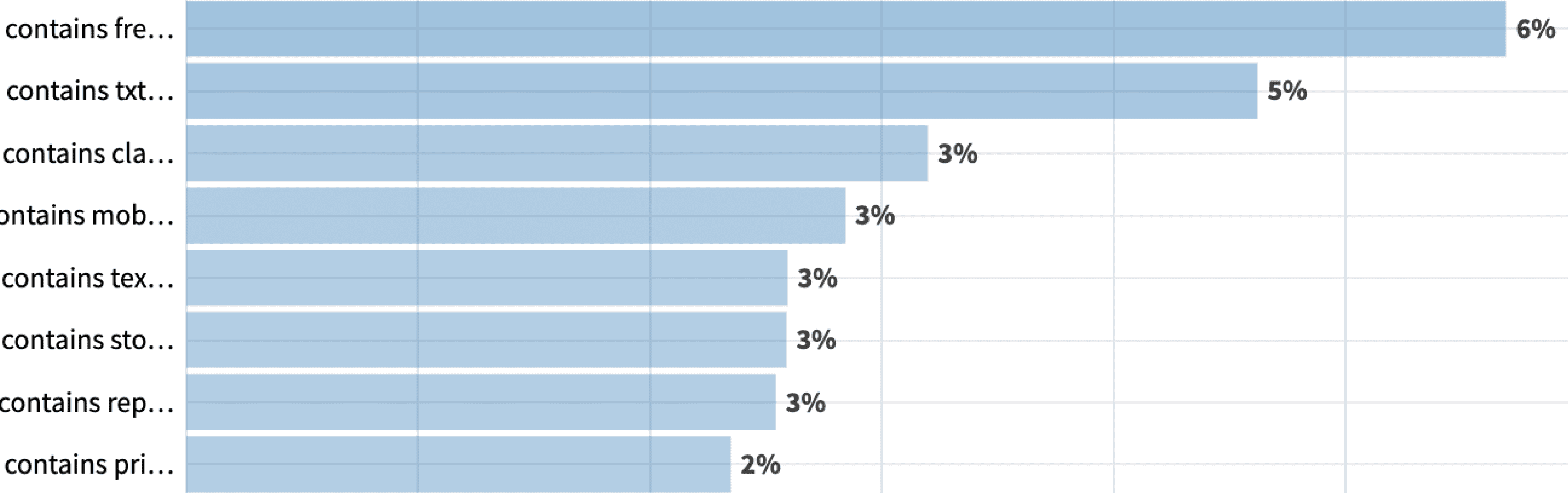

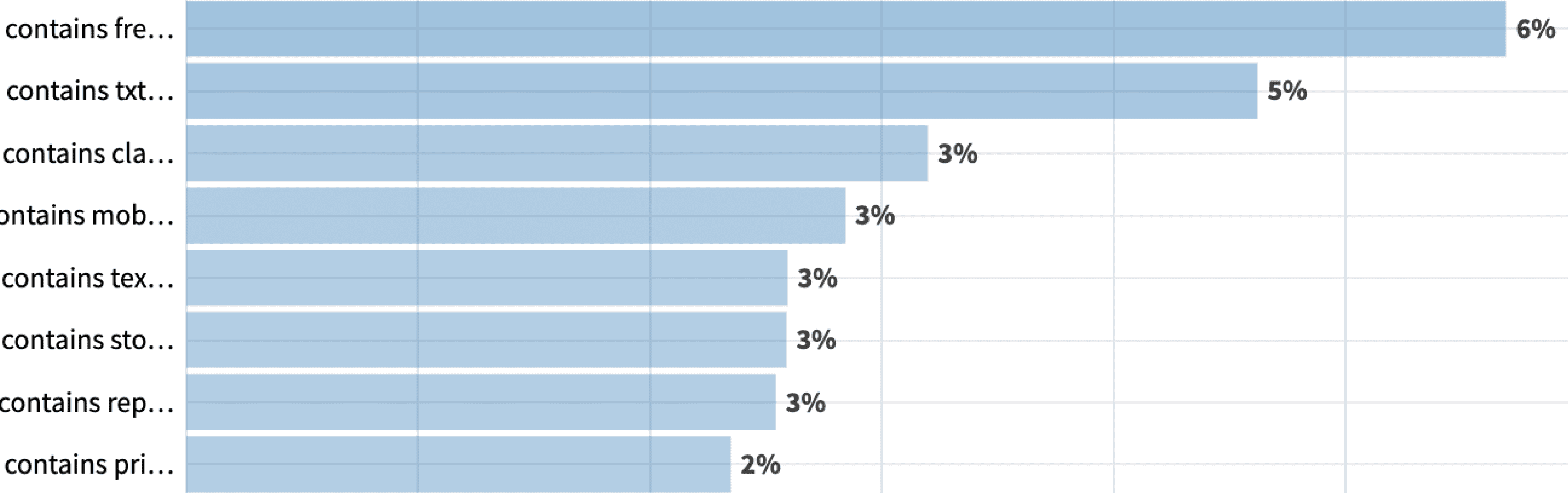

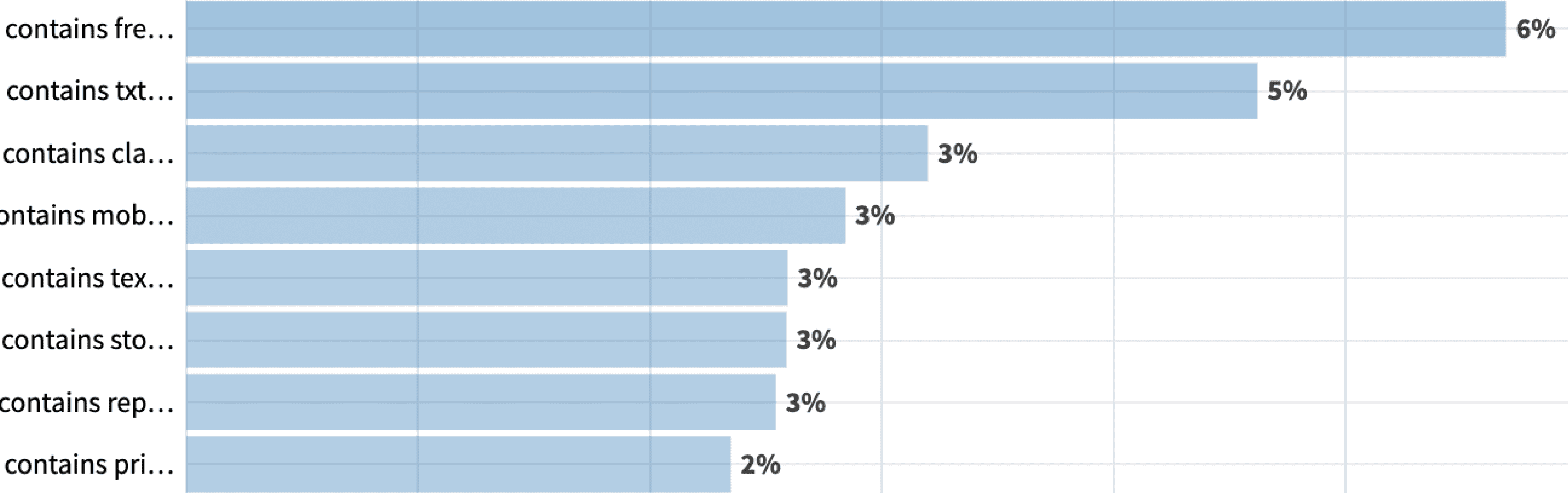

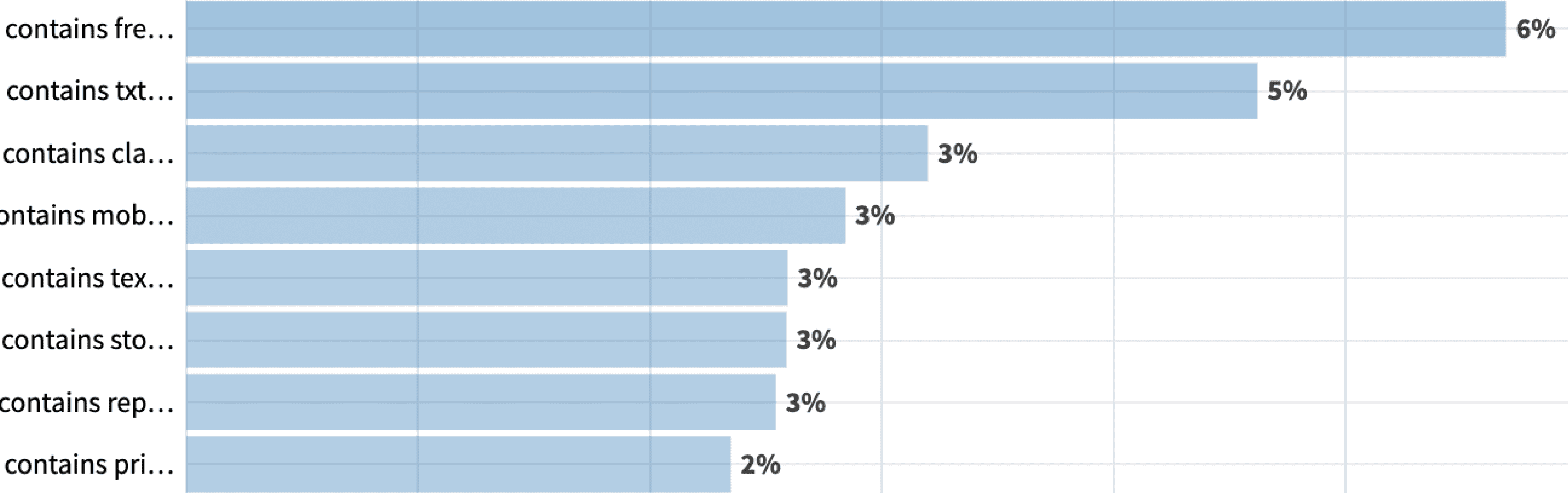

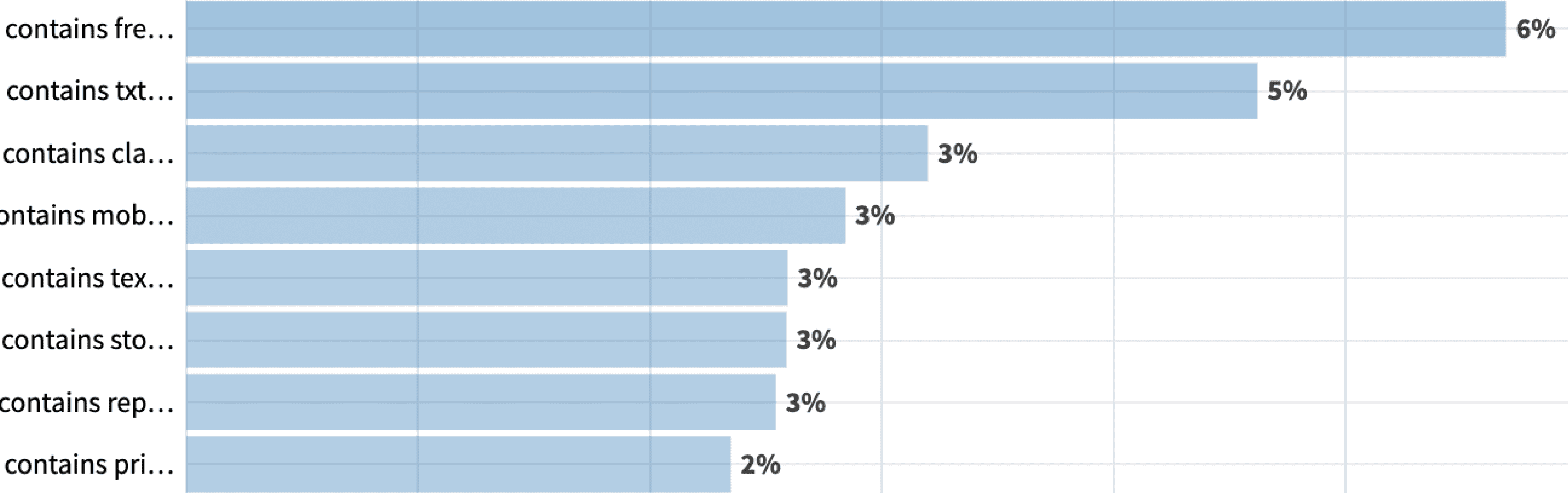

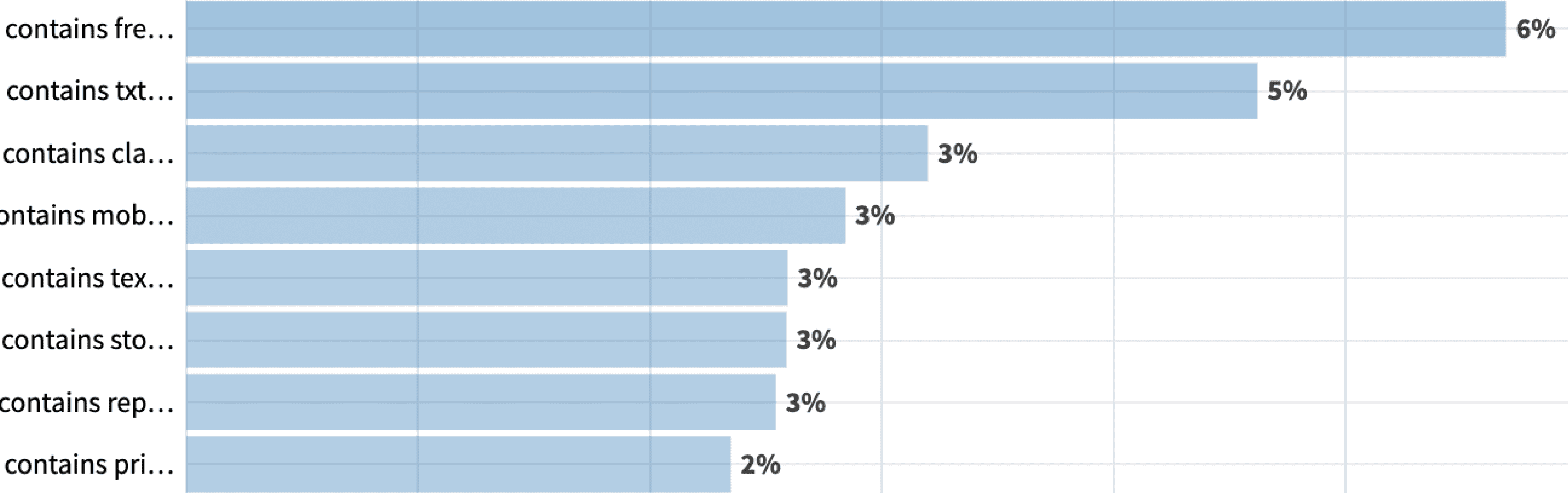

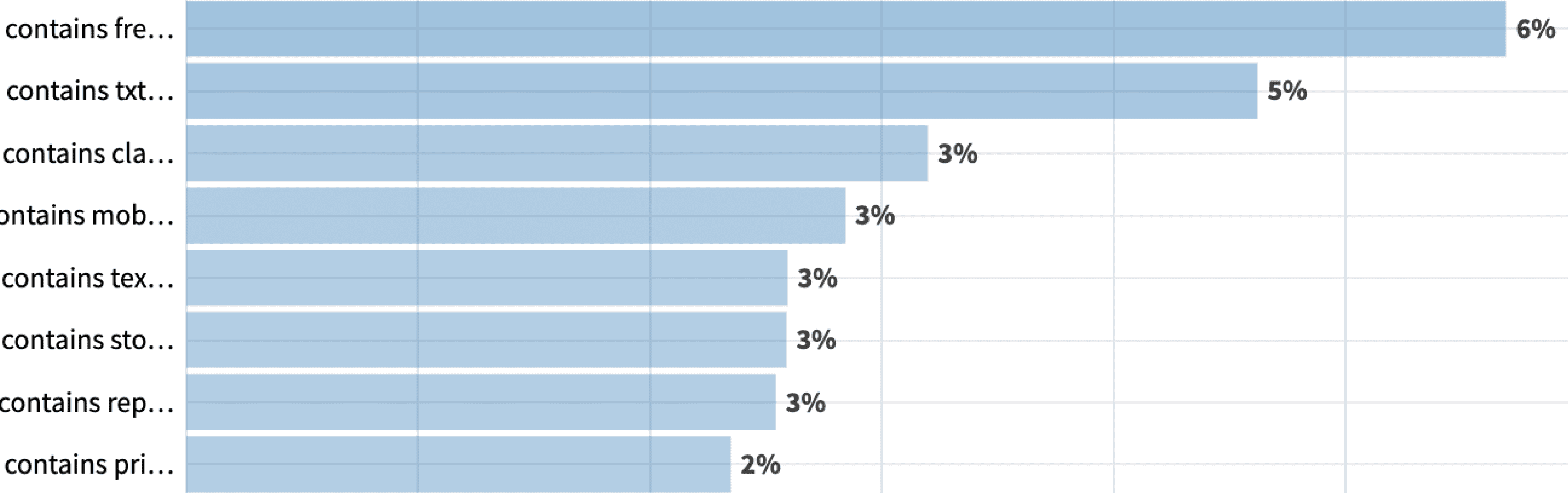

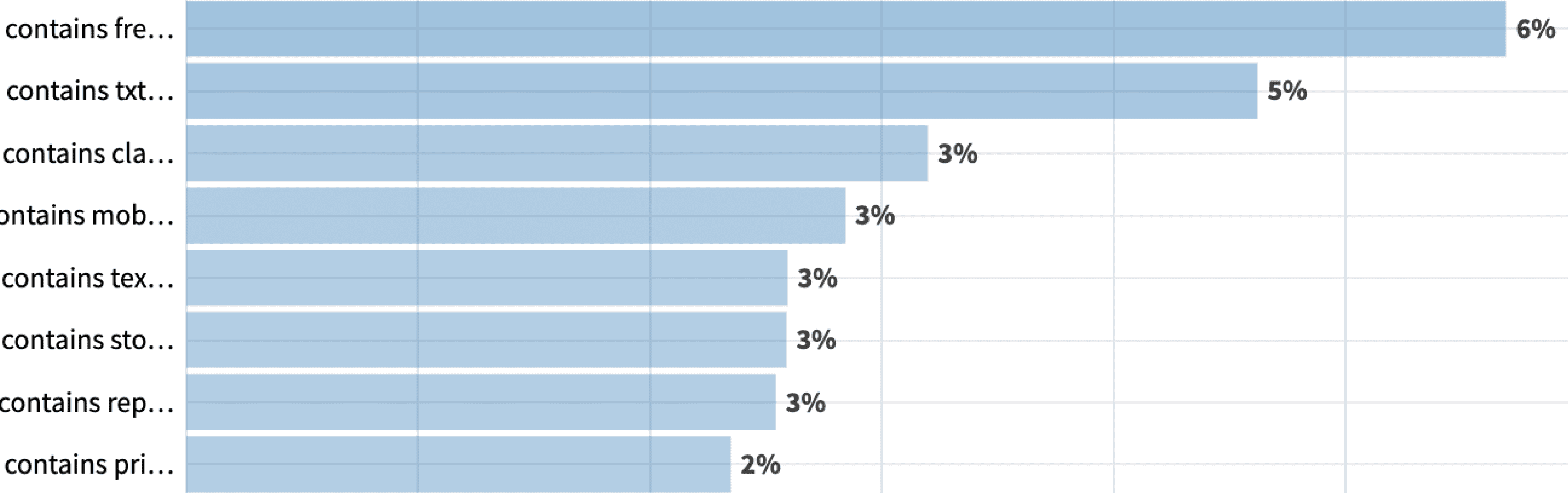

Sentiment analysis

1

Sentiment analysis scores range from 1 (very negative) to 5 (very positive).

The largest share of SMS messages (41.9%) are neutral or negative.

Spam messages show higher rates of extreme negativity (29%) than non-spam messages (13%), suggesting that reducing spam could lower negative emotions.

Screenshot from my Dataiku project

Word cloud

2

Most frequent spam words: “free,” “text,” “txt,” “ur,” “u,” “mobile,” and “claim.”

Caution: Words such as “u” and “ur” also appear in non-spam messages, making them less reliable predictors of spam.

Screenshot from my Dataiku project
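
The caution above can be illustrated with a short word-count comparison. This is a minimal Python sketch using only the standard library; the four example messages and the simple regex tokenizer are assumptions for illustration and are not drawn from the project dataset.

```python
import re
from collections import Counter

# Hypothetical example messages, not taken from the project data.
SPAM = [
    "FREE entry! txt WIN to claim ur prize",
    "Claim ur free mobile now, text YES",
]
NON_SPAM = [
    "r u coming home?",
    "send me ur address pls",
]


def word_counts(messages: list[str]) -> Counter:
    """Count lowercase word tokens across a list of messages."""
    counts: Counter = Counter()
    for message in messages:
        counts.update(re.findall(r"[a-z0-9']+", message.lower()))
    return counts


spam_counts = word_counts(SPAM)
non_spam_counts = word_counts(NON_SPAM)

# Words seen only in spam are stronger signals than words seen in both classes.
spam_only = {word for word in spam_counts if word not in non_spam_counts}
shared = {word for word in spam_counts if word in non_spam_counts}

print("top spam words:", spam_counts.most_common(3))
print("shared with non-spam:", sorted(shared))
```

In this toy sample, “free” and “claim” appear only in spam, while “ur” shows up in both classes, which is the pattern the caution describes.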

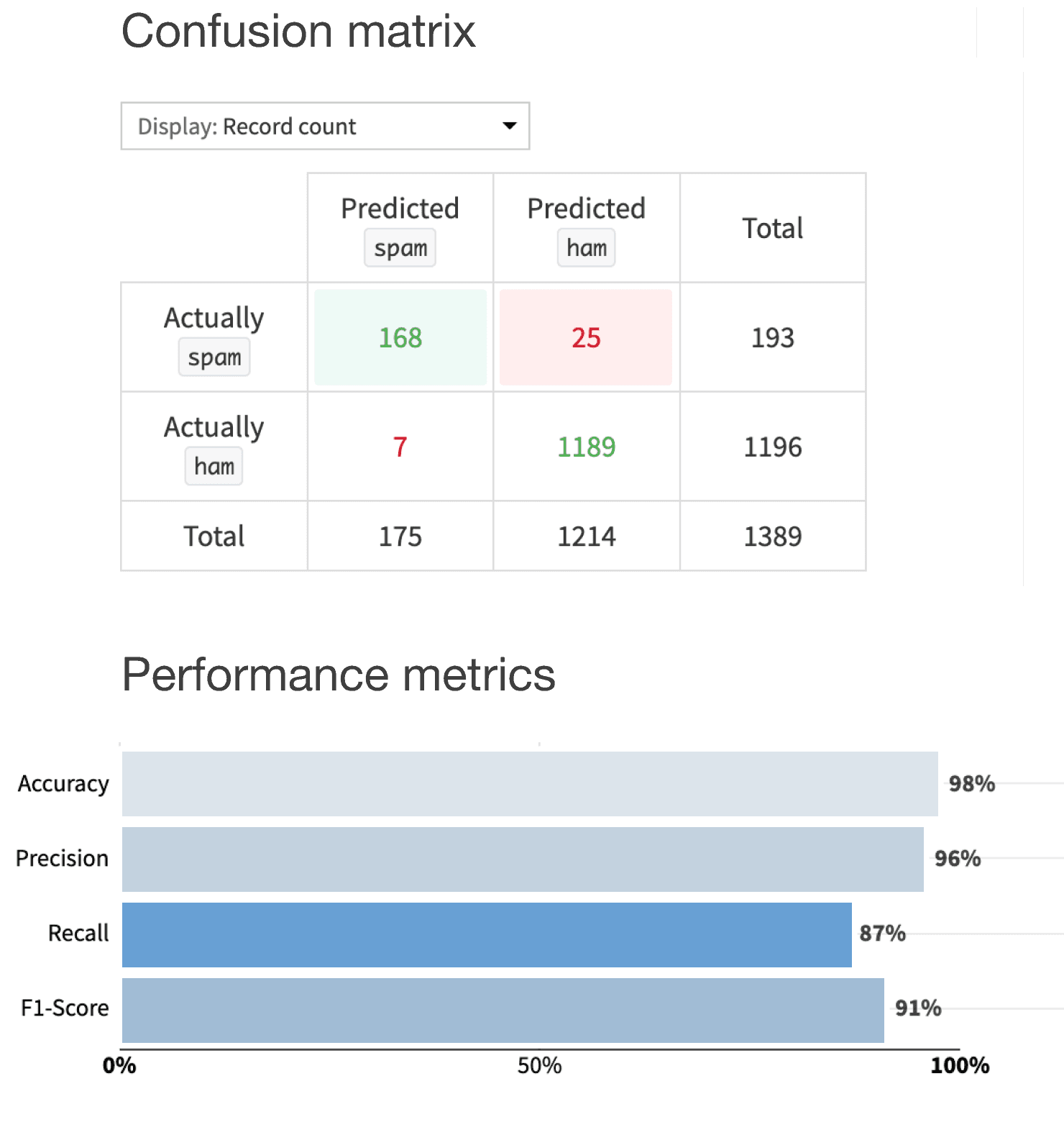

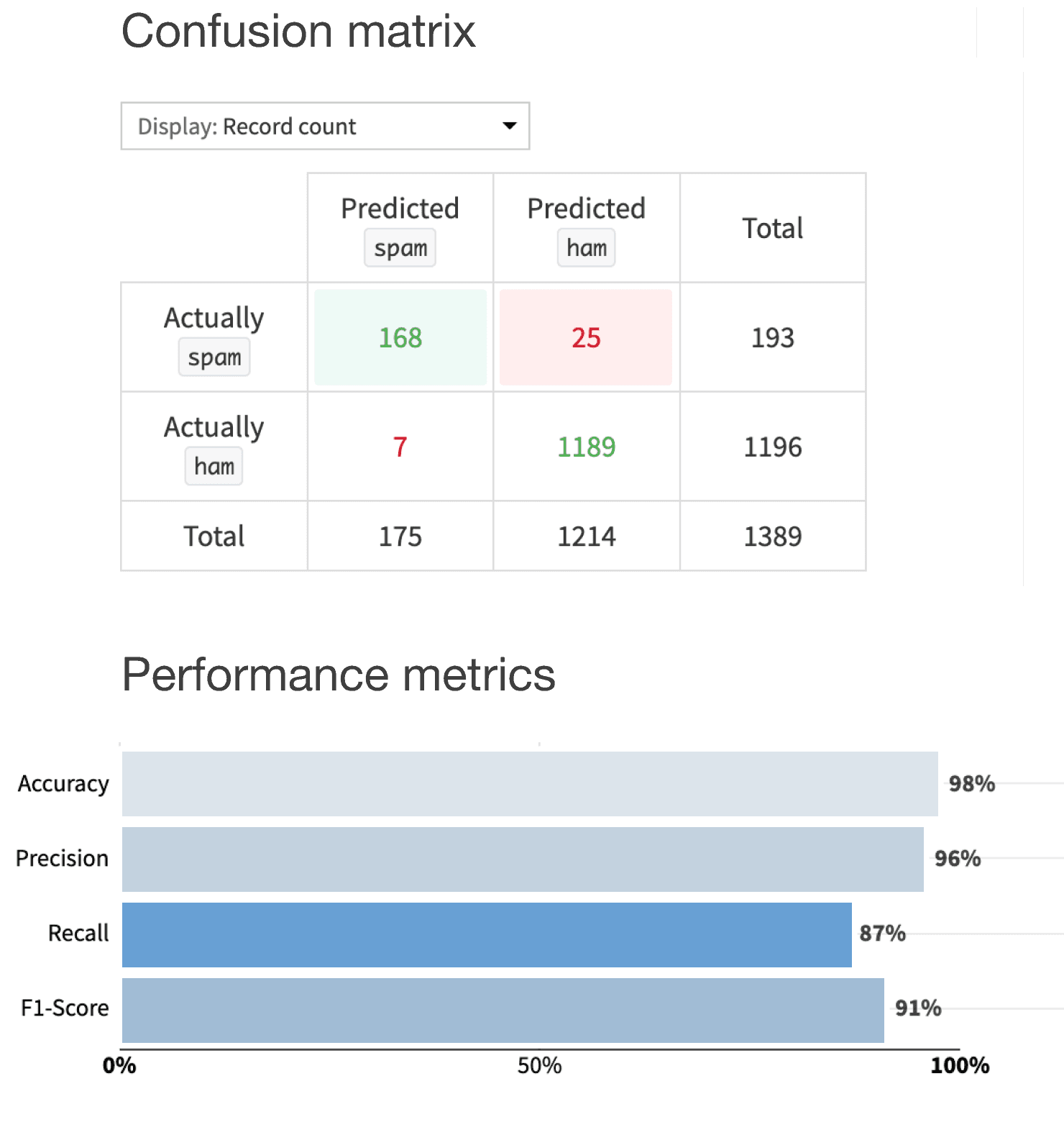

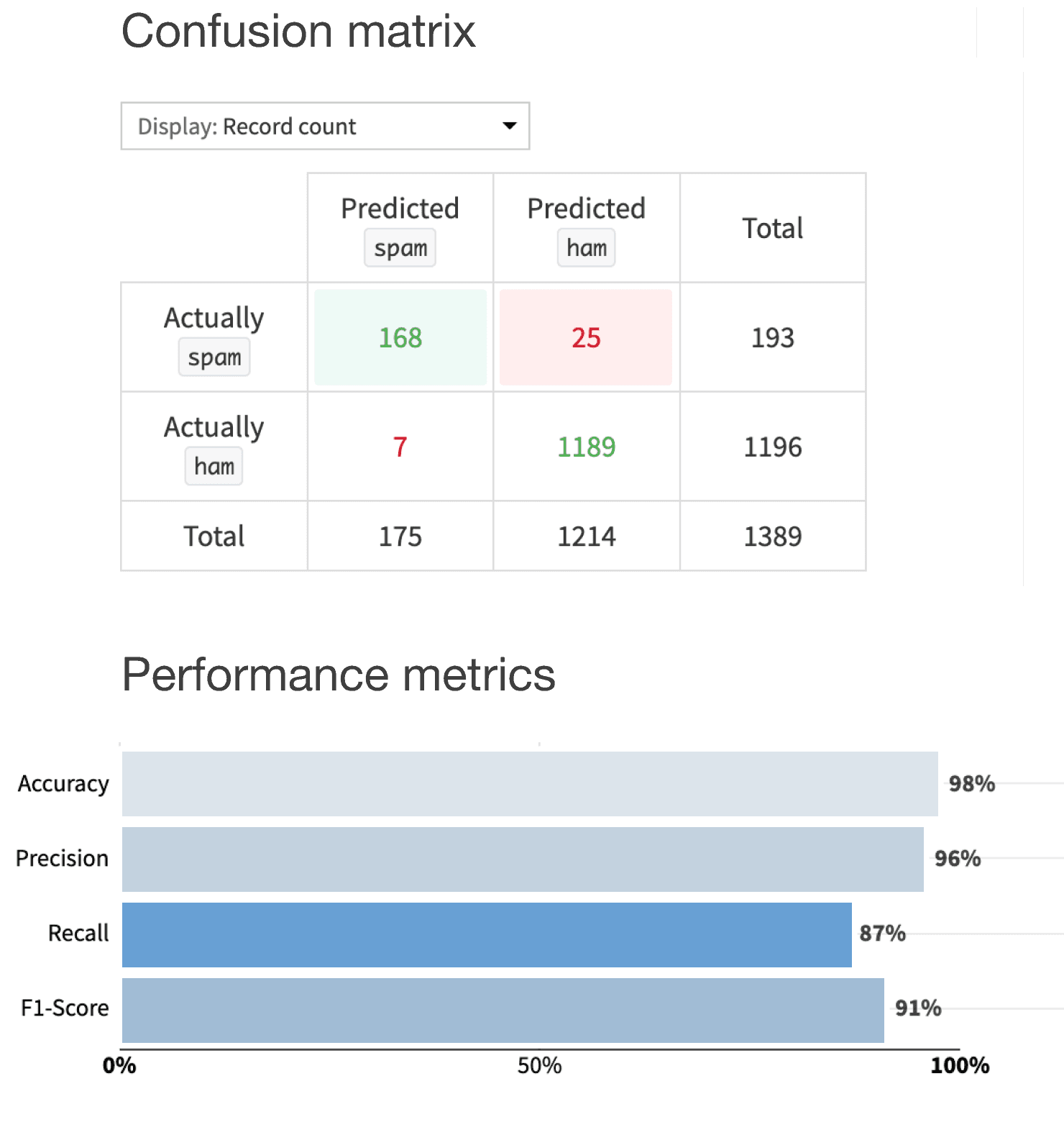

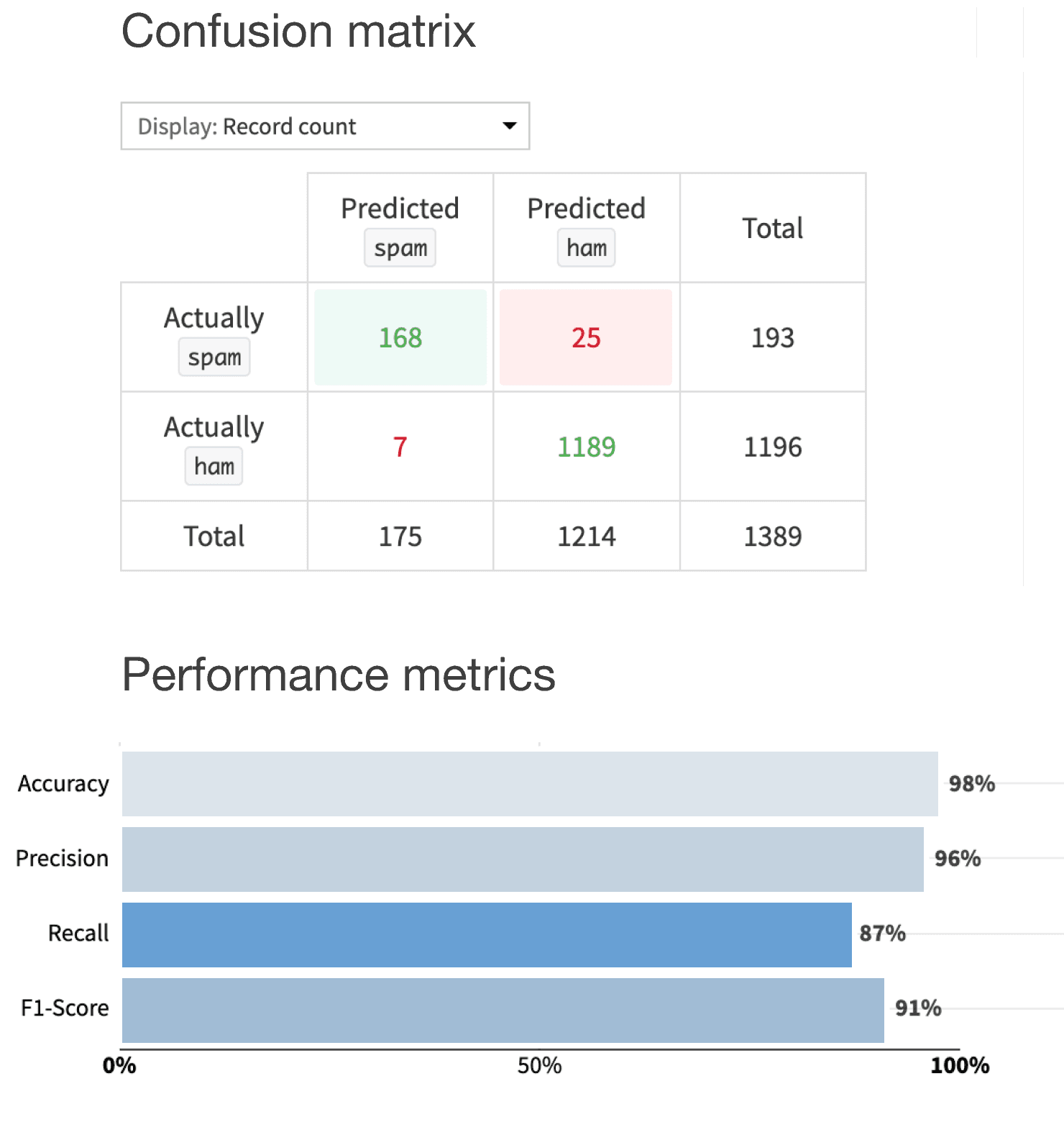

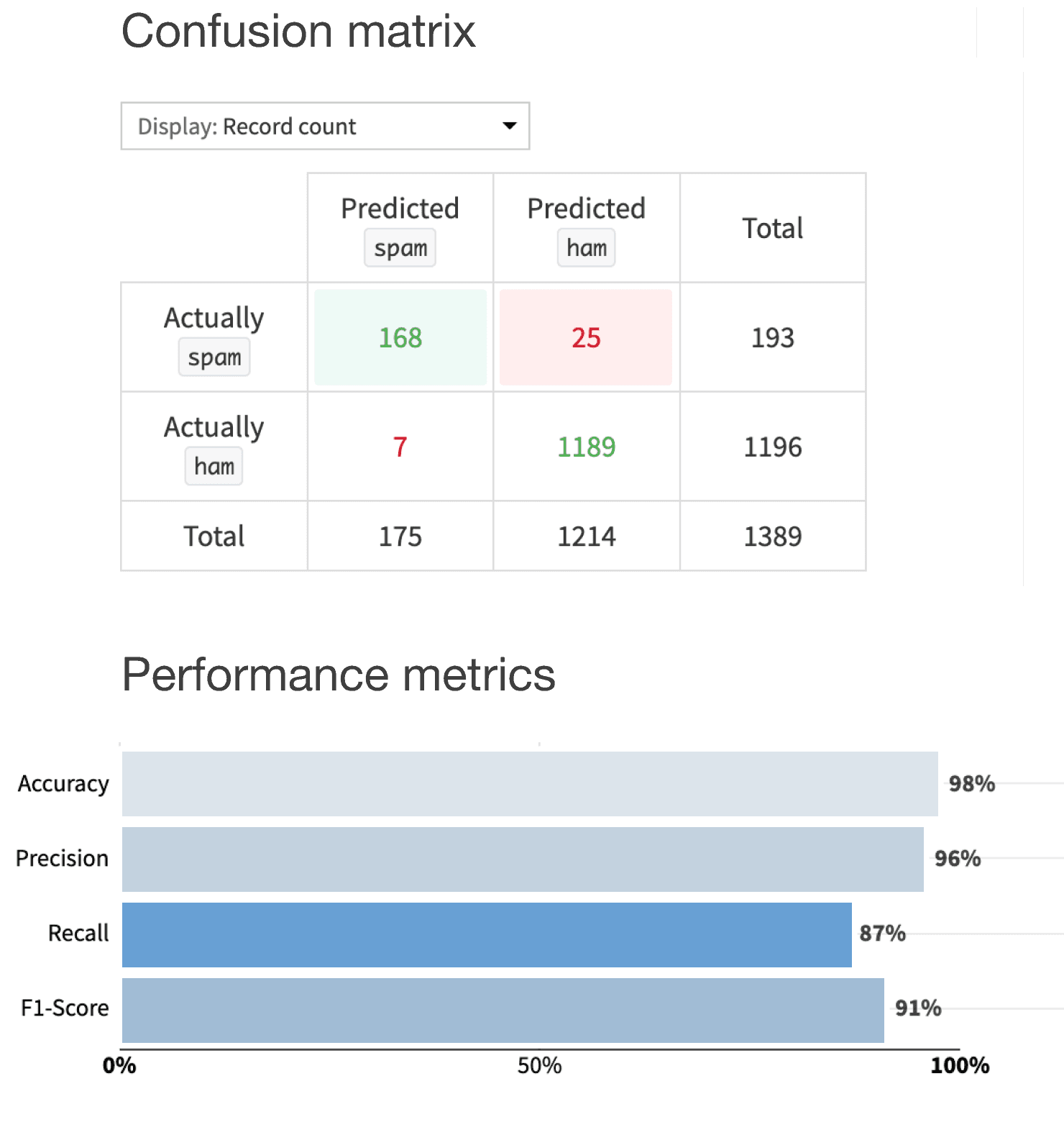

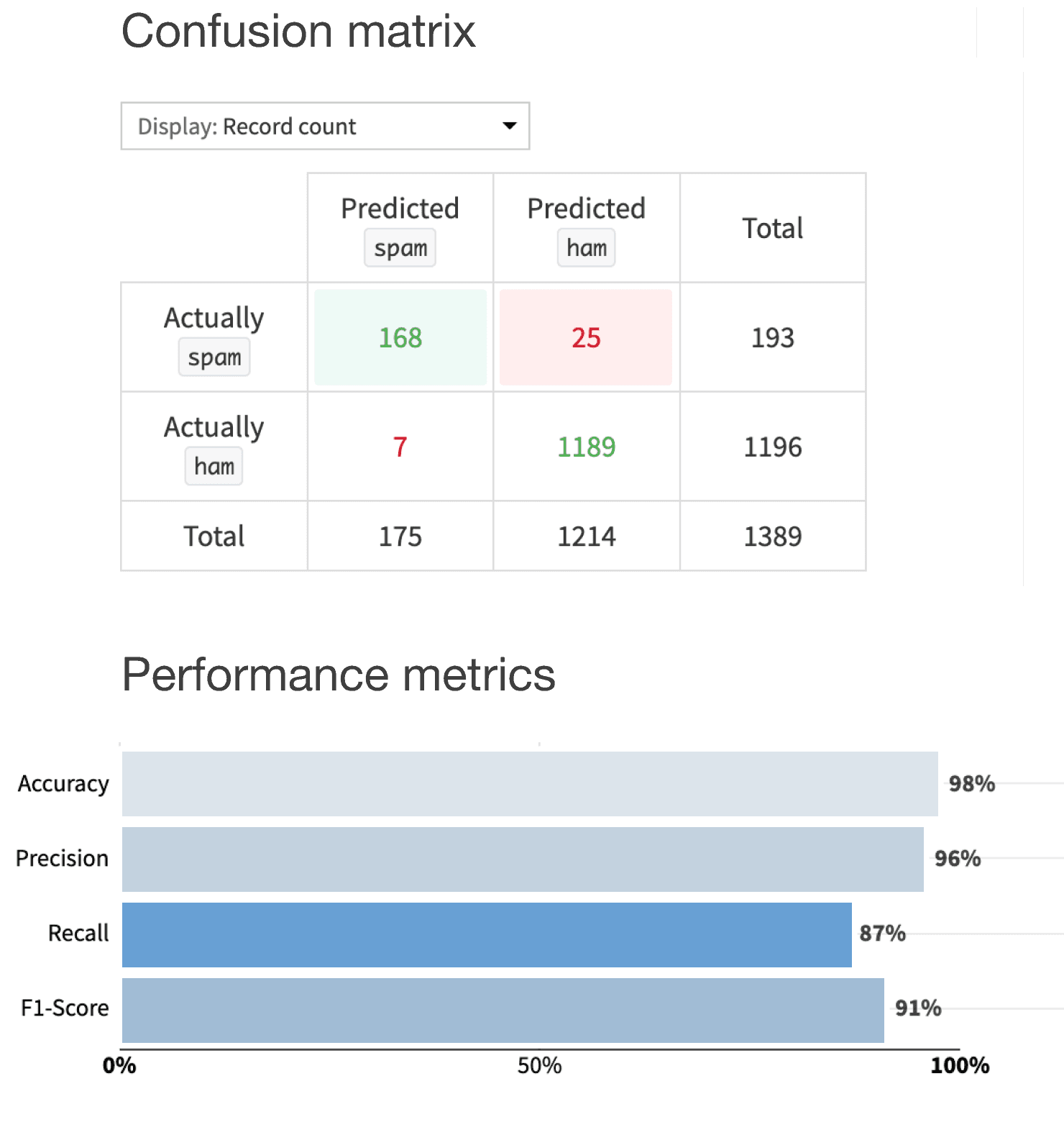

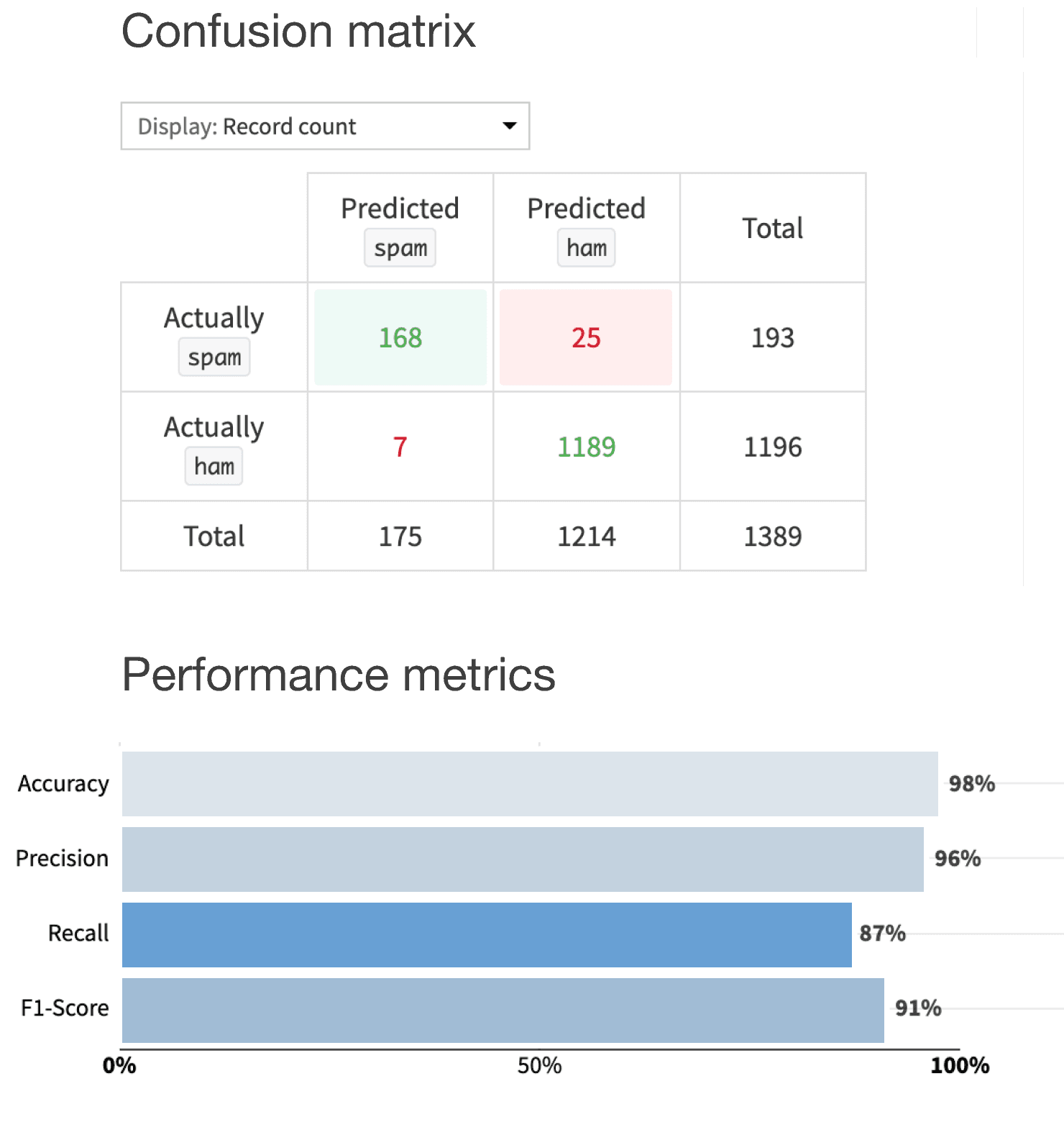

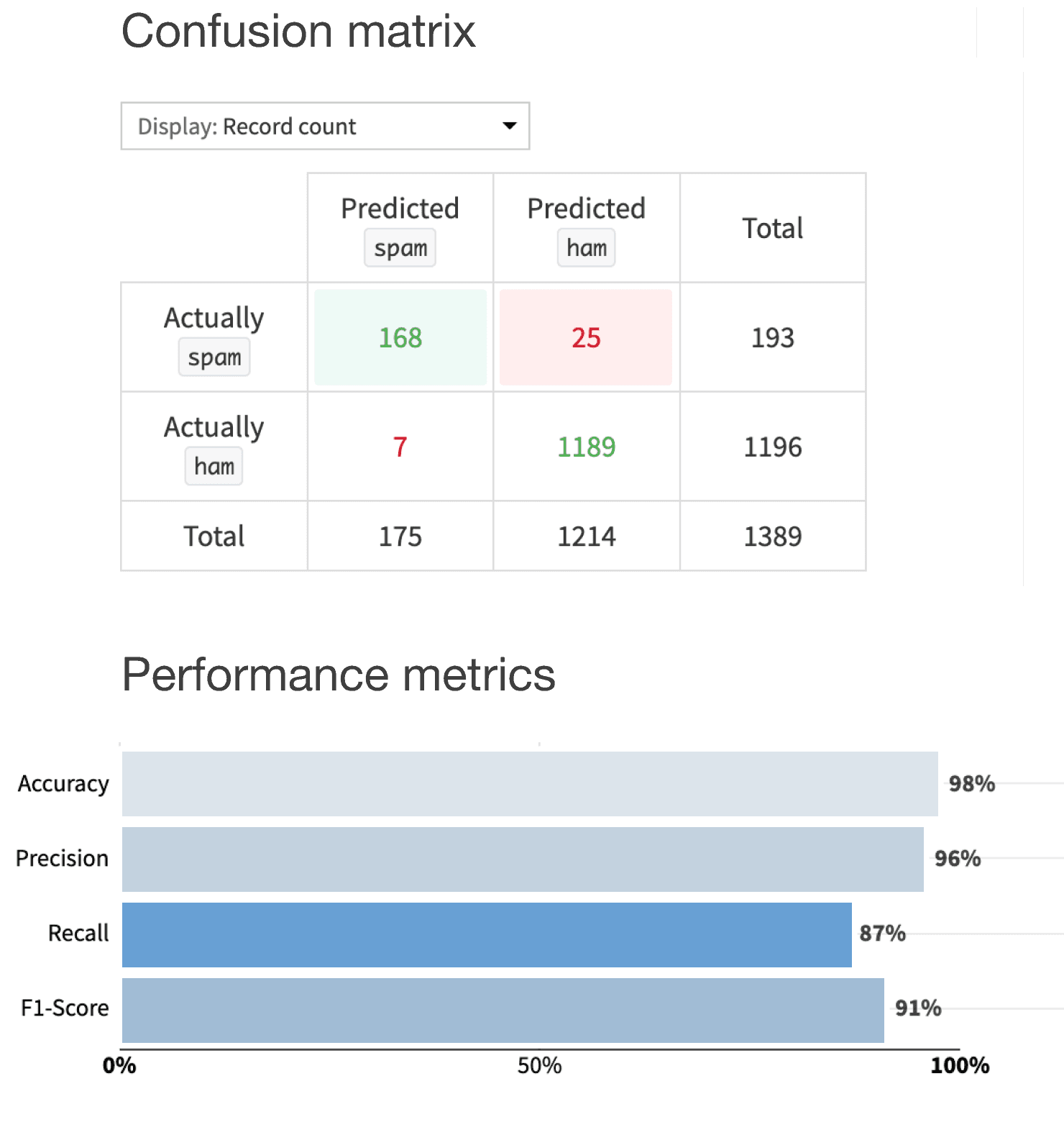

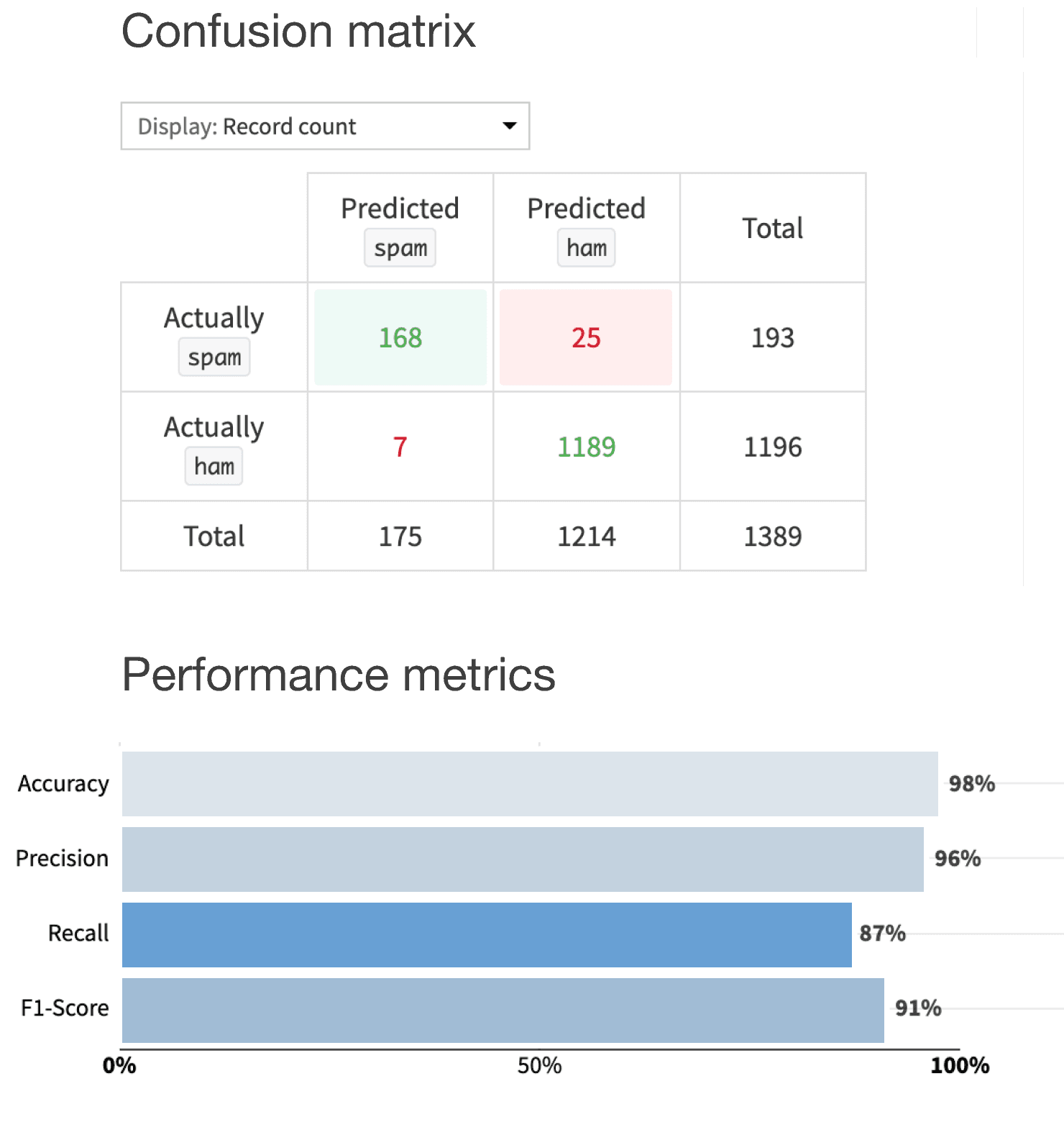

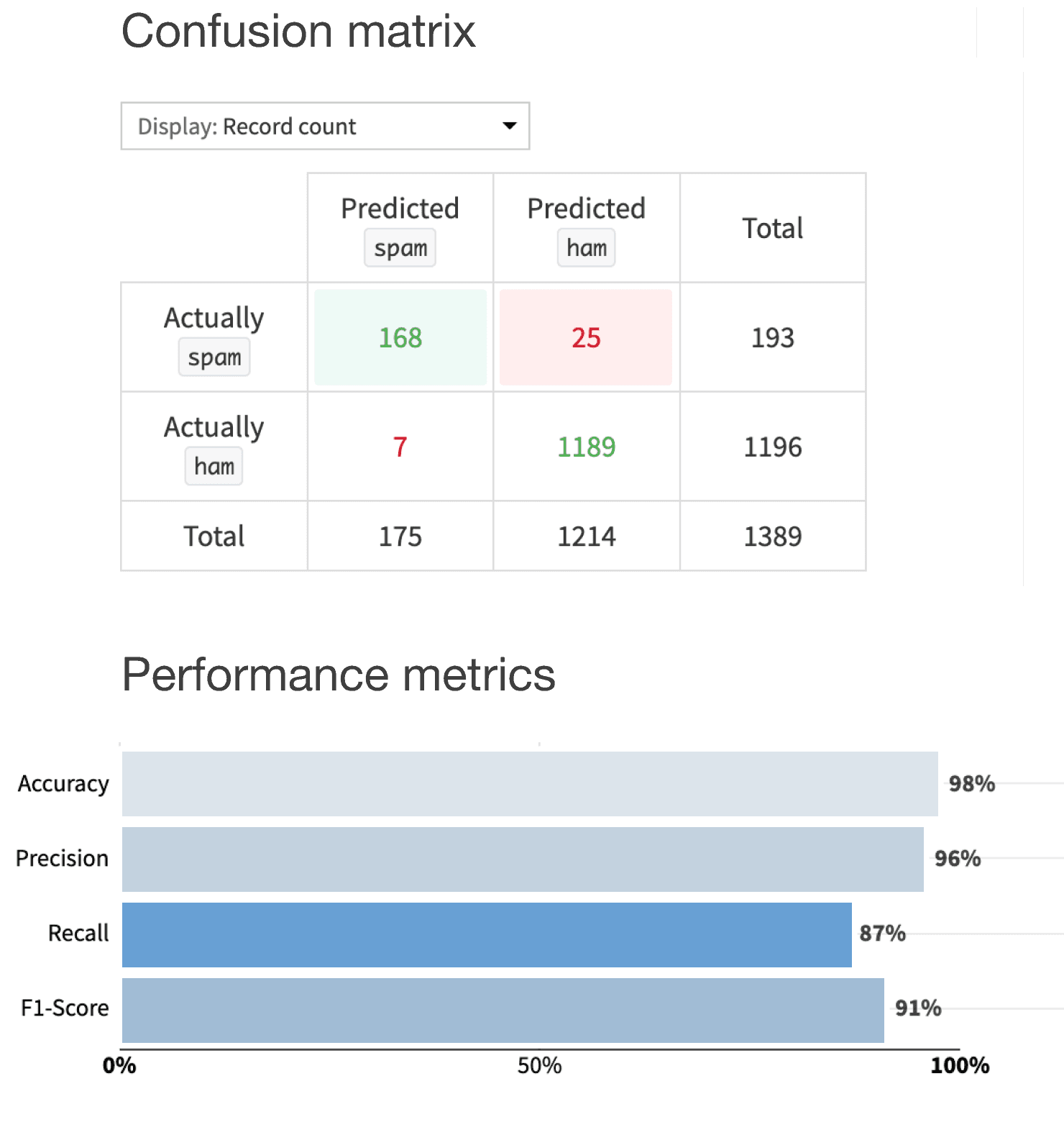

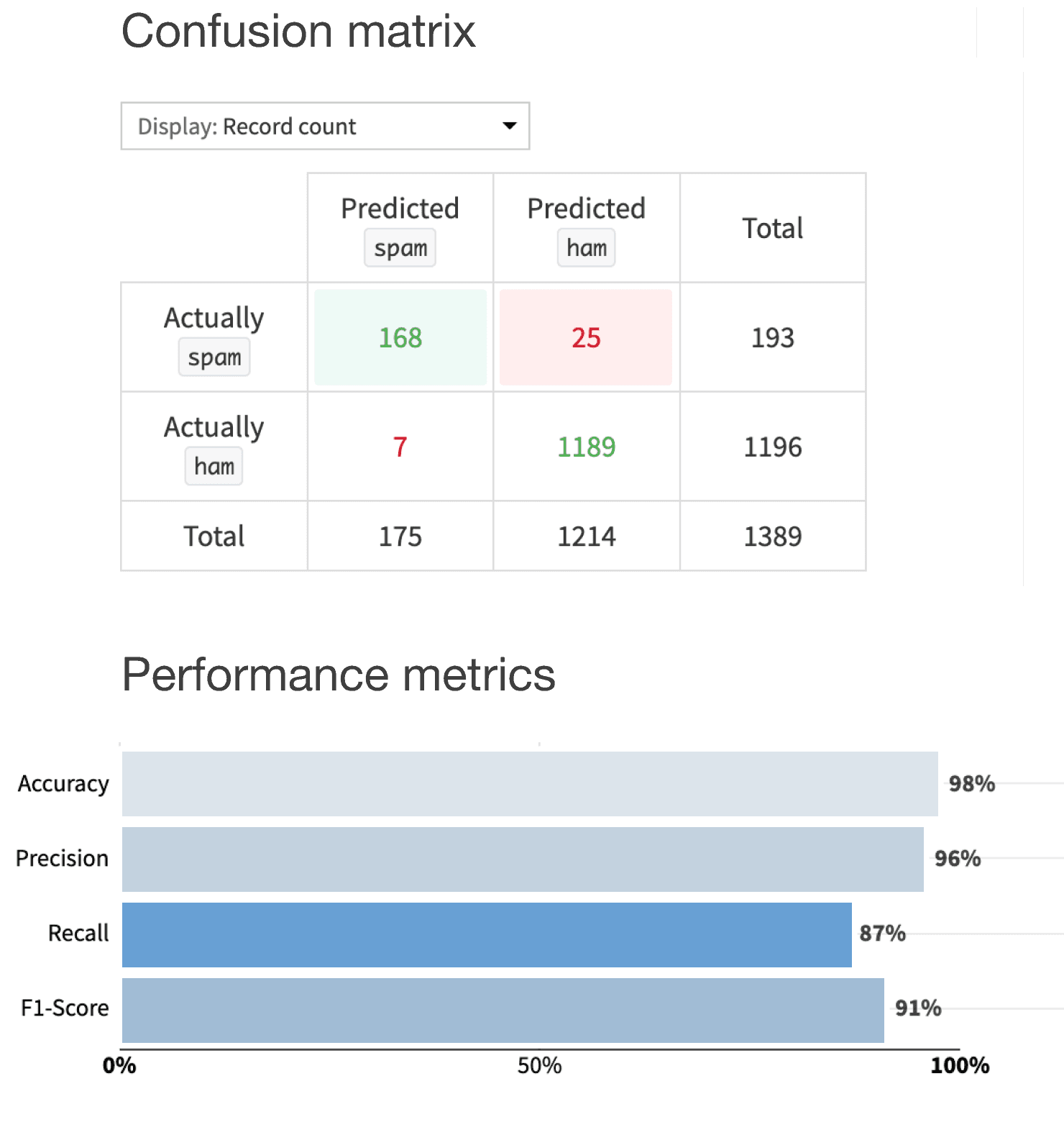

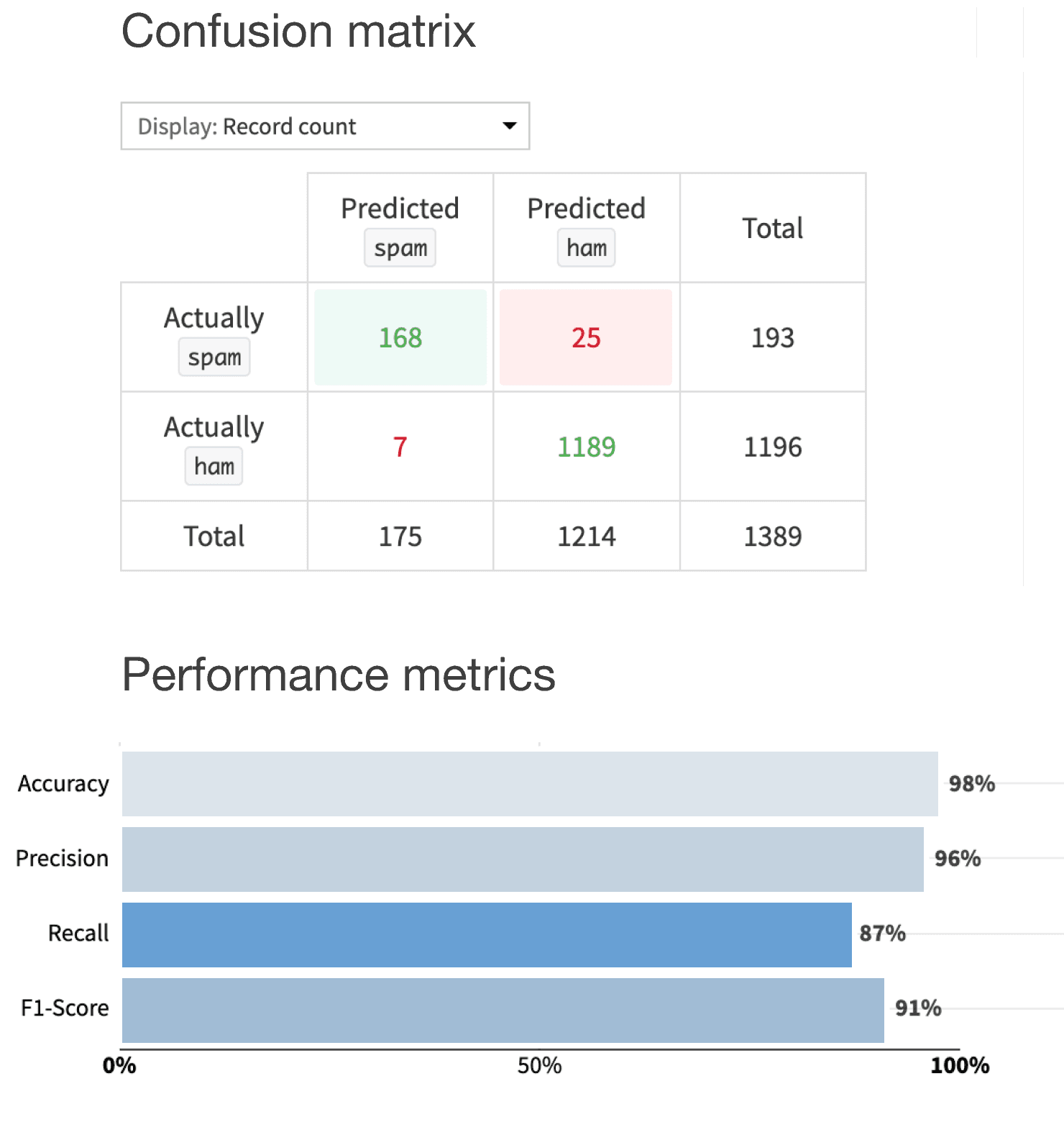

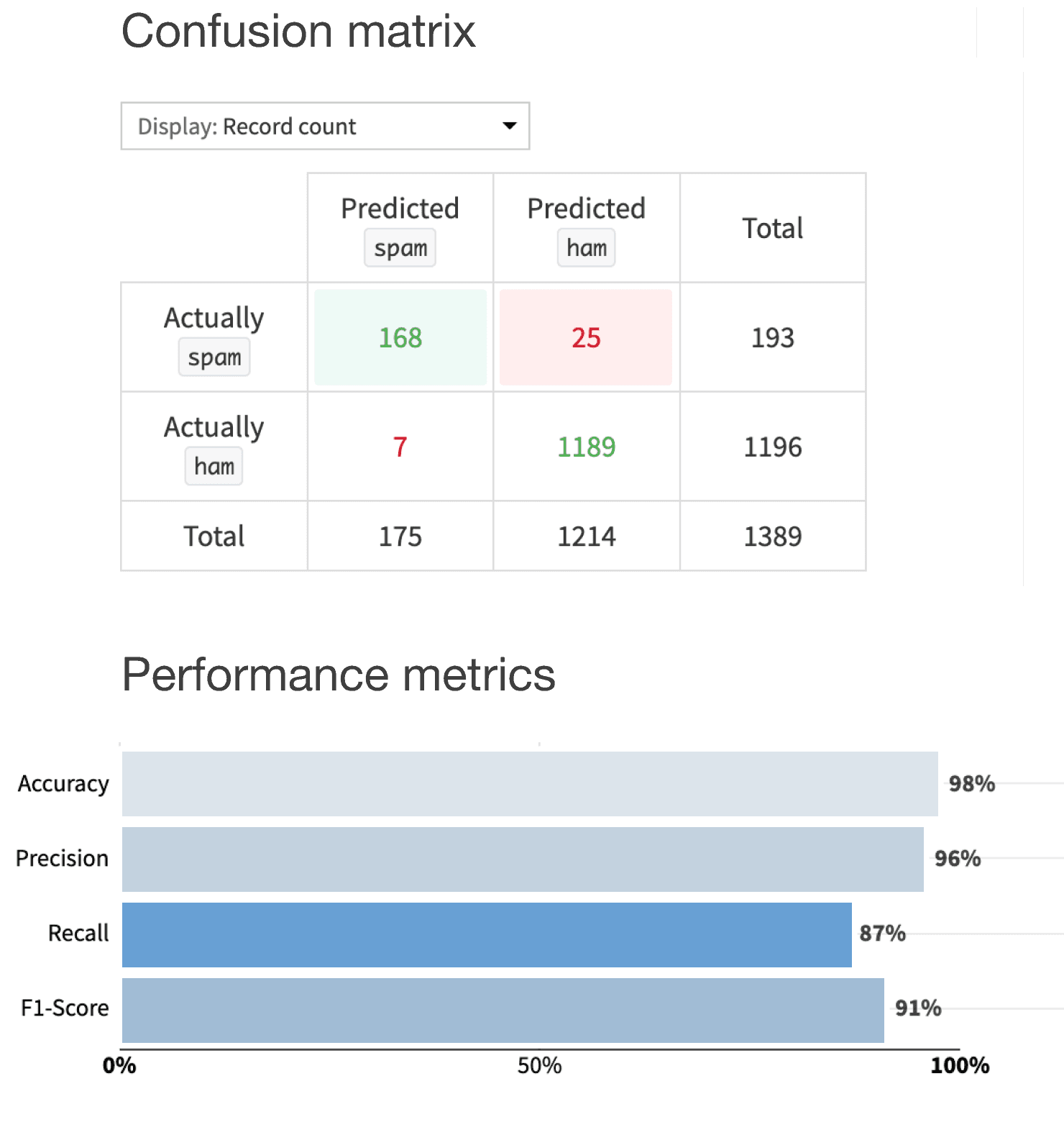

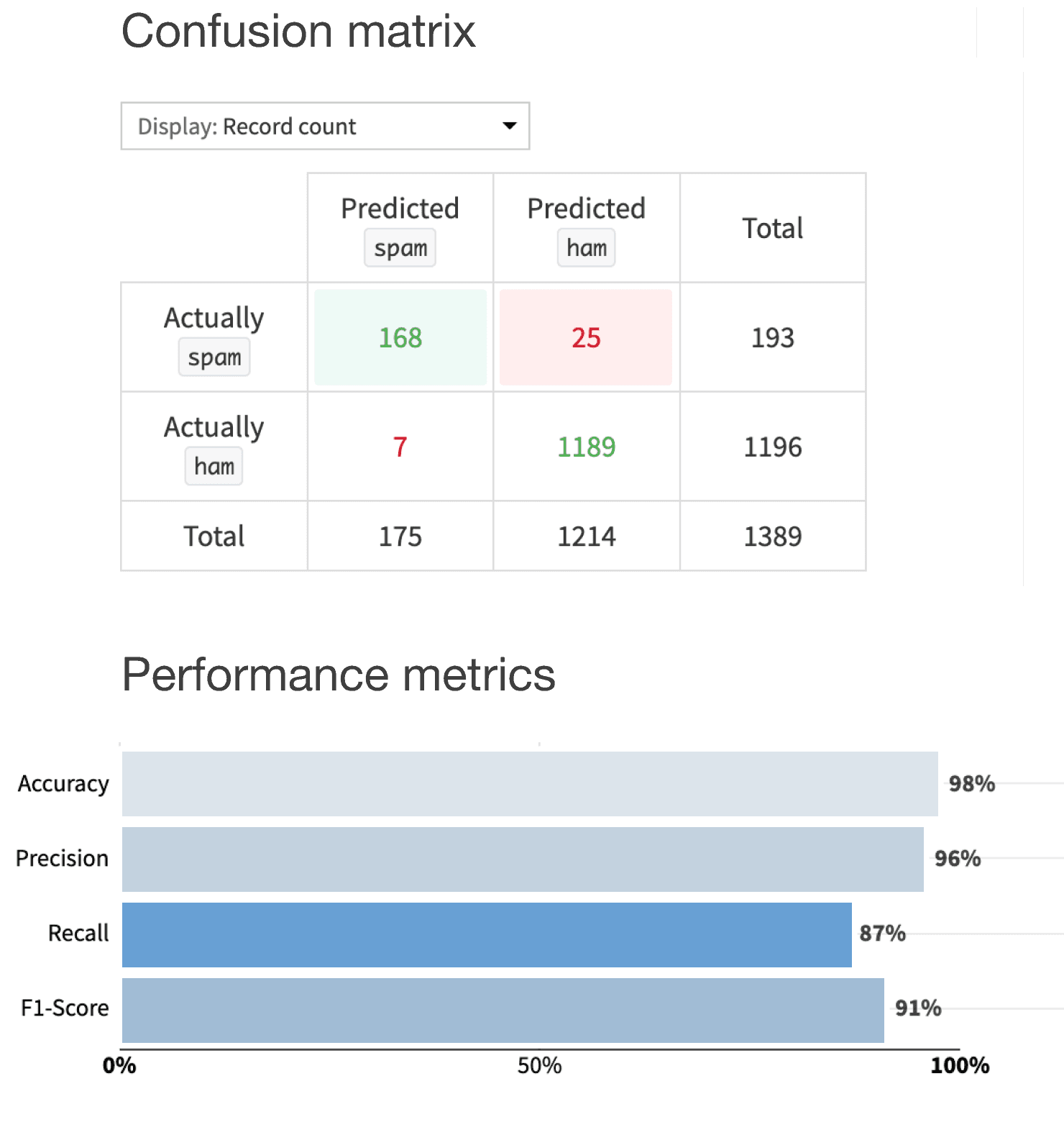

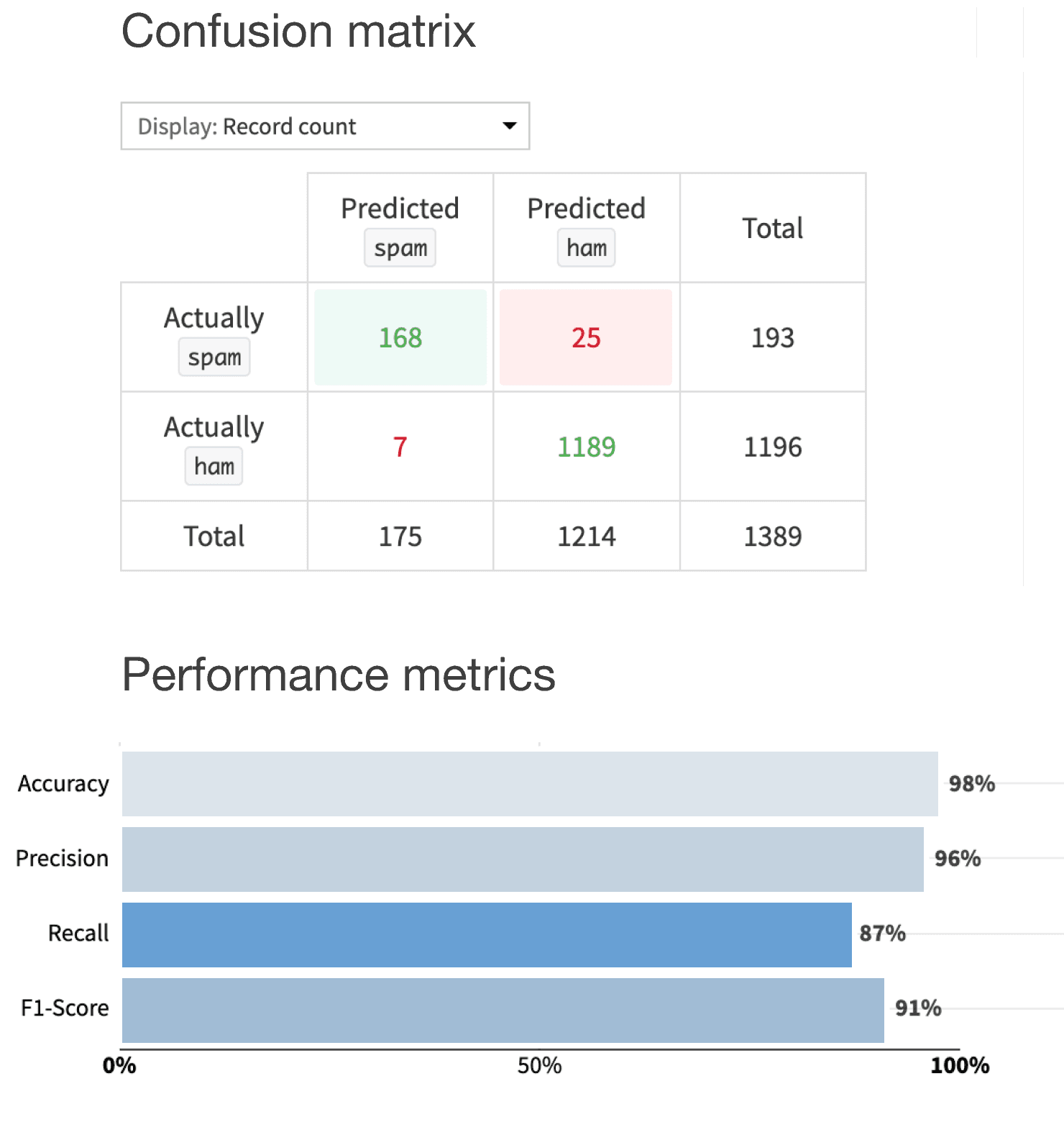

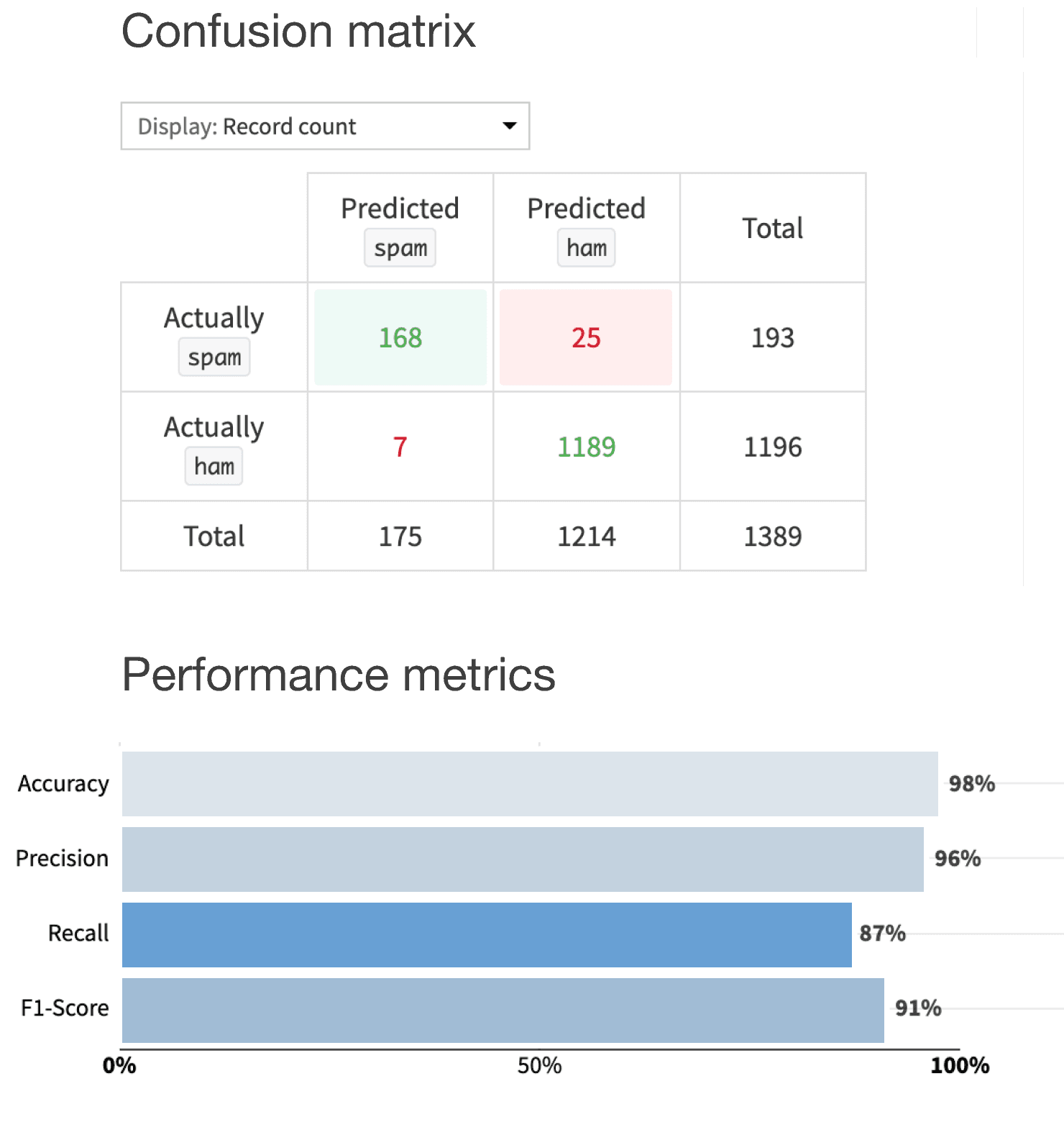

Model evaluation

3

Model choice: Baseline Random Forest model, selected for its superior performance metrics and recall, which minimizes costly missed spam detections.

Confusion matrix: Correctly identified 168 spam SMS, missed 25.

Performance metrics

Accuracy: 98%

F1-score: 91%

Recall: 87%